Static secrets

Overview

With the initial cluster configuration completed and auth methods enabled, you are now ready to enable your first use case. While Vault has many capabilities around cryptography and secrets management, the initial use case is often the manual creation, secure storage, and retrieval of static secrets.

Vault's KV secrets engine stores arbitrary static secrets. Secrets can be anything from passwords to database connection strings to API keys. Vault stores these securely, and the nature of the static secret is unimportant. What is important to consider is the workflow around the lifecycle of the secrets, namely who or what has permission to create, read, update, and delete any particular secret.

Vault handles this through the use of authentication and policy:

Authentication - Any interaction with Vault must be first authenticated. Authentication verifies the identity of a Vault client (e.g. user, machine, or application) to interact with Vault. It does not define any permissions on what that entity can look at inside Vault.

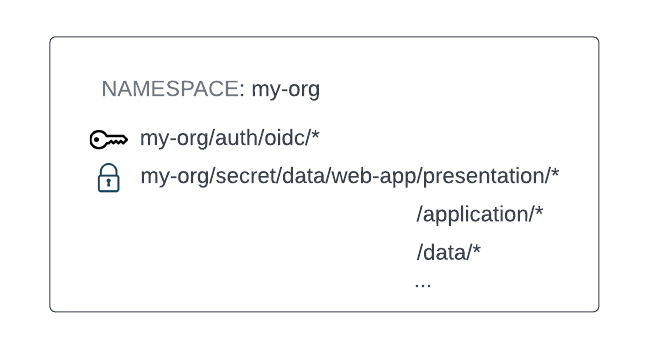

Policy - Policies are associated with Vault tokens and define both which secrets the authenticated client can interact with and in what ways.

In this section, we will:

- Discuss static secrets and what you should consider when laying out your secrets schema

- Discuss policies and what you should consider when creating them

- Discuss secret consumption and what you should consider when planning this strategy

Static secrets management

At this point, you should have one human auth method (OIDC or LDAP) and one machine auth method (AWS or AppRole) enabled, but no secrets in Vault and no policies set up to enforce access permissions of an authenticated entity.

Before you populate Vault with any static secrets, it is important to understand how Vault stores static secrets and plan how you will organize them.

Key/Value (KV) secrets engine

The KV secrets engine is a generic key-value store used to store arbitrary secrets within the configured physical storage for Vault. Secrets written to Vault are encrypted and then written to backend storage. Therefore, the backend storage mechanism never sees the unencrypted value and doesn't have the means necessary to decrypt it without Vault.

The KV secrets engine comes in two versions: version 1 and version 2. Version 2 provides secret versioning and utilizes a different API compared to version 1. KV v1 is more performant than v2 since there are fewer storage calls due to the lack of additional metadata or history being stored. Migration from v1 to v2 is possible but requires that the secret engine be taken offline during the migration. The process could potentially take a long time depending on the amount of data stored. In general, the additional features provided by KV v2 offset the additional write and storage overhead. Therefore, KV v2 is recommended by default unless you have specific performance requirements. Working with KV v1 is out of scope for this document.

Working with the KV secrets engine is straightforward. You can interact with the secrets engine through the UI, CLI, or API. Vault static secrets are laid out like a virtual filesystem. The path where you enable the KV secrets engine acts as the root of the filesystem. Everything after the root is the path to a secret, which holds one or more key-value pairs. For example, the commands below first enable the KV v2 secrets engine at the path kvv2/, then write a new secret to the path myapp with a key of foo and a value of bar. Finally, the vault kv get command reads the secret back.

$ vault secrets enable -version=2 -path="kvv2" \
    -description="Demo K/V v2" kv

Success! Enabled the kv secrets engine at: kvv2/

$ vault kv put -mount=kvv2 myapp foo=bar

= Secret Path =

kvv2/data/myapp

======= Metadata =======

Key Value

--- -----

created_time 2023-09-18T20:32:54.482700528Z

custom_metadata <nil>

deletion_time n/a

destroyed false

version 1

$ vault kv get -mount=kvv2 myapp

= Secret Path =

kvv2/data/myapp

======= Metadata =======

Key Value

--- -----

created_time 2023-09-18T20:32:54.482700528Z

custom_metadata <nil>

deletion_time n/a

destroyed false

version 1

=== Data ===

Key Value

--- -----

foo bar
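The output above reports the secret path as kvv2/data/myapp rather than kvv2/myapp. KV v2 inserts a data/ segment after the mount when secret data is addressed through the API or in policies. The following is a minimal sketch of that mapping; the helper name kv2_data_path is hypothetical and is not part of the Vault CLI.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Vault): build the KV v2 API path for a
# secret's data from the mount path and the secret path.
kv2_data_path() {
  local mount="${1%/}"   # strip one trailing slash from the mount, if present
  local path="${2#/}"    # strip one leading slash from the secret path, if present
  printf '%s/data/%s\n' "$mount" "$path"
}

kv2_data_path kvv2 myapp      # prints: kvv2/data/myapp
kv2_data_path kvv2/ /myapp    # prints: kvv2/data/myapp

# The equivalent raw API read, assuming VAULT_ADDR and VAULT_TOKEN are set:
#   curl --header "X-Vault-Token: $VAULT_TOKEN" \
#        "$VAULT_ADDR/v1/$(kv2_data_path kvv2 myapp)"
```

The same data/ segment appears in the policy paths written later in this section, which is why those policies reference secret/data/billing-service even though the CLI is given -mount=secret billing-service.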

Mount and path structure

Before writing any data to the KV secrets engine, it is important to consider how to organize the data under the secrets engine mount. The KV structure is important because it helps to simplify and reduce the number of Vault policies by grouping similar data under the same path.

Antipatterns for KV path structure

Some common antipatterns when designing a KV path structure are detailed below.

Creating paths based on environments

For example secret/my-app/dev/*, secret/my-app/uat/*, secret/my-app/prod/*. We do not recommend creating paths based on environments. Customers wanting to have Vault in different environments should run a separate Vault instance in each environment. For instance, if you have a dev, prod, and preprod environment, you should have a Vault instance in dev, prod, and preprod as well. If this is not feasible due to cost and overhead, you should at a minimum separate prod and non-prod. This allows Vault admins to:

- Have a process for letting devs add secrets in lower environments

- Maintain the same path structure across all environments, which minimizes need for application code changes.

Note

You should consider the increased load on your production cluster from non-prod clients, which might require you to provision hardware with better compute and storage performance.

Creating paths based on teams

We also do not recommend creating paths based on teams. This antipattern tightly couples application code to organizational topology, which may prove confusing in the event of team restructuring. Instead, we recommend designing paths based on the application concern. Generically, structure your paths as follows: <app-name>/<service>/<component>/secret. For example, in a webstore app, you might have a billing service and an identity service, and within them you have secrets for specific components:

webapp/billing/checkout/<secret1>

webapp/identity/password-reset/<secret1>
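As a sketch of how this convention could be enforced in tooling, the helper below composes a path of the form <app-name>/<service>/<component>/<secret> and rejects malformed segments. The function name kv_secret_path and the allowed character set (lowercase letters, digits, hyphens) are assumptions made for illustration; Vault itself does not impose them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compose a KV path of the form
# <app-name>/<service>/<component>/<secret> and reject segments that are empty
# or contain characters outside a conservative set (an assumption, not a Vault rule).
kv_secret_path() {
  if [ "$#" -ne 4 ]; then
    echo "usage: kv_secret_path <app> <service> <component> <secret>" >&2
    return 2
  fi
  local segment
  for segment in "$@"; do
    case "$segment" in
      ''|*[!a-z0-9-]*)
        echo "invalid path segment: '$segment'" >&2
        return 1
        ;;
    esac
  done
  printf '%s/%s/%s/%s\n' "$1" "$2" "$3" "$4"
}

kv_secret_path webapp billing checkout api-key   # prints: webapp/billing/checkout/api-key

# Possible use with the CLI (requires a running Vault and a valid token):
#   vault kv put -mount=secret "$(kv_secret_path webapp billing checkout api-key)" value=...
```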

Recommended KV mount and path structure

We recommend that you mount a single KV v2 secrets engine with sub-paths per application concern. For example, in a webstore app with a 3-tier architecture (presentation, application, data), the mount structure would look like:

This mount structure provides several benefits:

- It reduces the potential of hitting mount table limits.

- It reduces operational complexity by centralizing all static secrets under a single mount

Note

There is a limit to the number of secret engines you can mount. For integrated storage, the maximum number of secret engine mount points is approximately 14000. See Vault limits and maximums for more information.

Configure the KV secrets engine

In this section, you will enable your first KV secrets engine and test out the API.

Similar to all other auth methods and secrets engines, you must enable the KV secrets engine before you can use it.

Log in to your Vault cluster as an admin and use the command below to enable the KV v2 secrets engine using the CLI on a custom path under the org-level namespace.

$ export VAULT_NAMESPACE=<org level namespace>

$ vault secrets enable -version=2 -path=secret kv

Success! Enabled the kv secrets engine at: secret/

Working with KV v2

Here we will briefly explore the functionalities of the KV v2 secrets engine. For a more thorough understanding, please review the tutorials and documentation below.

Writing data

$ vault kv put -mount=secret my-secret foo=a bar=b

==== Secret Path ====

secret/data/my-secret

======= Metadata =======

Key Value

--- -----

created_time 2023-09-20T18:57:04.098790466Z

custom_metadata <nil>

deletion_time n/a

destroyed false

version 1

Reading data

$ vault kv get -mount=secret my-secret

==== Secret Path ====

secret/data/my-secret

======= Metadata =======

Key Value

--- -----

created_time 2023-09-20T18:57:04.098790466Z

custom_metadata <nil>

deletion_time n/a

destroyed false

version 1

=== Data ===

Key Value

--- -----

bar b

foo a

Write another version of secret

$ vault kv put -mount=secret my-secret foo=aa bar=bb

==== Secret Path ====

secret/data/my-secret

======= Metadata =======

Key Value

--- -----

created_time 2023-09-20T18:58:31.636294687Z

custom_metadata <nil>

deletion_time n/a

destroyed false

version 2

Reading the same secret now will return the latest version

$ vault kv get -mount=secret my-secret

==== Secret Path ====

secret/data/my-secret

======= Metadata =======

Key Value

--- -----

created_time 2023-09-20T18:58:31.636294687Z

custom_metadata <nil>

deletion_time n/a

destroyed false

version 2

=== Data ===

Key Value

--- -----

bar bb

foo aa

Read a previous version by specifying the -version flag

$ vault kv get -mount=secret -version=1 my-secret

==== Secret Path ====

secret/data/my-secret

======= Metadata =======

Key Value

--- -----

created_time 2023-09-20T18:57:04.098790466Z

custom_metadata <nil>

deletion_time n/a

destroyed false

version 1

=== Data ===

Key Value

--- -----

bar b

foo a

Delete the latest version of a secret

$ vault kv delete -mount=secret my-secret

Success! Data deleted (if it existed) at: secret/data/my-secret

Undelete a specific version of a secret

$ vault kv undelete -mount=secret -versions=2 my-secret

Success! Data written to: secret/undelete/my-secret

$ vault kv get -mount=secret my-secret

==== Secret Path ====

secret/data/my-secret

======= Metadata =======

Key Value

--- -----

created_time 2023-09-20T18:58:31.636294687Z

custom_metadata <nil>

deletion_time n/a

destroyed false

version 2

=== Data ===

Key Value

--- -----

bar bb

foo aa

Permanently delete a specific version of a secret

$ vault kv destroy -mount=secret -versions=2 my-secret

Success! Data written to: secret/destroy/my-secret

Vault policies

At this point, you should have one human auth method (OIDC or LDAP) and one machine auth method (AWS or AppRole) enabled. You have also enabled the KV v2 secrets engine with some secrets populated. The next step is to configure policies to manage access to these secrets for authenticated clients.

Policies provide a declarative way to grant or forbid access to certain paths and operations in Vault. When an identity authenticates to Vault it receives a token, and all policies associated with that identity are attached to that token.

Vault static secrets are organized and accessed using paths, and it is the role of ACL policies to describe the permissions on these paths. For example:

- A secret object is created at secrets/billing-service

- A policy named “billing-service-read” is created where “read” capabilities are defined against the above path

- The identity group “billing-service-dev” is mapped to the “billing-service-read” policy in the OIDC auth method.

- As a member of the “billing-service-dev” group, Bob authenticates with Vault using OIDC, and the token Bob receives has the “billing-service-read” policy attached

- Bob is allowed to read the secret at secrets/billing-service

A thorough exploration of Vault policies can be found in the following documents:

There are some best practices that you should keep in mind when writing Vault policies.

Principle of least privilege

Err on the side of caution if a consumer is uncertain or lacks clarity regarding their access and policy requirements. If a client realizes the need for access to a secret at a later point, it can be identified and addressed accordingly. In contrast, granting access to an unnecessary secret can be challenging to detect and carries potential risks.

Role-based policies

It is often helpful to create several core policies based on functional roles when onboarding users to Vault. For example:

- Vault Cluster Administrator - full access to Vault

- Vault Operator - responsible for general Vault operations such as configuring auth methods, secrets engines, and policies

- Security Team - consume audit logs, review and approve policy changes

- Developer - read-only access to specific paths required by their applications

- Application Owners - write access to specific paths for their applications

In addition to role-based policies for Vault users, organizations often have numerous policies tailored for application integrations. Each application may have distinct requirements that warrant the creation of individual policies. Moreover, multiple policies may be crafted for various stages in the software development lifecycle (e.g., development, quality assurance, staging, production) for each application. Furthermore, automation workflows, such as CI/CD pipelines or other application build tools, may also require specific policies.

Policy templates

Being as restrictive as possible in your policies can lead to a large number of policies to manage. To simplify this process, we recommend the use of ACL Policy Path Templating.

Policy templating allows a much smaller set of policies to be used while still providing the fine-grained access controls needed.
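As an illustration, the sketch below writes a single templated policy that gives every authenticated entity its own sub-path instead of requiring one policy per entity. It assumes a KV v2 engine mounted at secret/; the policy name per-entity-kv and the local policies directory are hypothetical.

```shell
#!/usr/bin/env bash
# Write a templated ACL policy to a local file. The quoted 'EOF' keeps the shell
# from expanding anything inside the heredoc.
POLICY_DIR="${POLICY_DIR:-./policies}"   # hypothetical local directory
mkdir -p "$POLICY_DIR"

cat > "$POLICY_DIR/per-entity-kv.hcl" <<'EOF'
# Each authenticated entity may manage secrets only under a sub-path named
# after its own entity name (KV v2 mounted at secret/ is assumed).
path "secret/data/{{identity.entity.name}}/*" {
  capabilities = ["create", "read", "update", "delete"]
}

# Listing keys in KV v2 goes through the metadata/ prefix.
path "secret/metadata/{{identity.entity.name}}/*" {
  capabilities = ["list"]
}
EOF

# To upload it (requires a Vault token allowed to write policies):
#   vault policy write per-entity-kv "$POLICY_DIR/per-entity-kv.hcl"
```

Vault substitutes the {{identity.entity.name}} parameter when it evaluates a request, so one policy covers all entities it is attached to.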

Version control

We recommend that you put your policies in a version control system such as GitHub and have a peer-review process for both security and audit purposes. Change management and standardization become much easier when policies are centrally managed in a code repository. Vault operators or application teams can submit changes using pull requests and Vault administrators or security teams can approve or deny policy changes.

We recommend using Terraform to codify the configuration of Vault, including policy management. Refer to the Codify Management of Vault Enterprise tutorial which demonstrates policy deployment in multiple namespaces.
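Terraform remains the recommended approach. Purely to illustrate how policy files kept in a repository map onto Vault, the sketch below applies every file in a directory with the CLI. The layout policies/<policy-name>.hcl, the function name apply_policies, and the DRY_RUN switch are assumptions for this example.

```shell
#!/usr/bin/env bash
# Apply every policy file in a directory, naming each policy after its file.
# Hypothetical layout: policies/<policy-name>.hcl, tracked in version control.
# With DRY_RUN=1 the commands are printed instead of executed.
apply_policies() {
  local dir="$1" file name
  for file in "$dir"/*.hcl; do
    [ -e "$file" ] || continue            # directory has no .hcl files
    name="$(basename "$file" .hcl)"
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "vault policy write $name $file"
    else
      vault policy write "$name" "$file"
    fi
  done
}

# Example: preview what a pipeline run would do.
#   DRY_RUN=1 apply_policies ./policies
```

A dry run like this could be attached to a pull request so that reviewers see which policies a merge would touch before anything is written to Vault.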

Configure policies for KV Secrets

In this section, you will create policies for a hypothetical application to:

- Allow the application owners to create and update static secrets

- Allow the application developers to read static secrets for their application

- Allow the application to read its required static secrets

Before you begin, you should have:

- Configured either the OIDC or LDAP auth method for human access

- Configured either the AWS or AppRole auth method for machine access

- Enabled the KV v2 secrets engine

Step 1: Write a secret

Log in as an admin user and write a secret for the hypothetical application (billing-service).

$ vault kv put -mount=secret billing-service foo=bar

== Secret Path ==

secret/data/billing-service

======= Metadata =======

Key Value

--- -----

created_time 2023-09-21T18:27:29.267845823Z

custom_metadata <nil>

deletion_time n/a

destroyed false

version 1

Step 2: Create policy for application owners

$ vault policy write billing-service-owner -<<EOF

path "secret/data/billing-service" {

capabilities = ["create", "read", "update", "delete"]

}

EOF

Success! Uploaded policy: billing-service-owner

Step 3: Create policy for the application and its developers

$ vault policy write billing-service-dev -<<EOF

path "secret/data/billing-service" {

capabilities = ["read"]

}

EOF

Success! Uploaded policy: billing-service-dev

Step 4: Associate policy for human auth method

For OIDC auth:

Similar to the configuration for Vault admins in setting up the OIDC auth method, you can use the commands below to create an external group called “billing-service-owner” for the application owners and associate it to the “billing-service-owner” policy. This external group is tied to an OIDC group called “billing-service-owner” by the group alias.

$ GROUP_ID=$(vault write -format=json identity/group \
    name="billing-service-owner" \
    type="external" \
    policies="billing-service-owner" | jq -r ".data.id")

$ MOUNT_ACCESSOR=$(vault read -field=accessor sys/mounts/auth/oidc)

$ vault write identity/group-alias \
    name="billing-service-owner" \
    mount_accessor=$MOUNT_ACCESSOR \
    canonical_id=$GROUP_ID

Create another external group called “billing-service-dev” for the application developers.

$ GROUP_ID=$(vault write -format=json identity/group \
    name="billing-service-dev" \
    type="external" \
    policies="billing-service-dev" | jq -r ".data.id")

$ MOUNT_ACCESSOR=$(vault read -field=accessor sys/mounts/auth/oidc)

$ vault write identity/group-alias \
    name="billing-service-dev" \
    mount_accessor=$MOUNT_ACCESSOR \
    canonical_id=$GROUP_ID

For LDAP auth:

Map the LDAP group called “billing-service-owner” for the application owners to the “billing-service-owner” policy.

$ vault write auth/ldap/groups/billing-service-owner policies=billing-service-owner

Map the LDAP group called “billing-service-dev” for the application developers to the “billing-service-dev” policy.

$ vault write auth/ldap/groups/billing-service-dev policies=billing-service-dev

Step 5: Associate policy for machine auth method

For AWS auth:

Similar to the configuration when you set up a demo role for the AWS auth method, use the command below to create a new role called “billing-service-dev” for the application and associate it to the “billing-service-dev” policy.

$ vault write auth/aws/role/billing-service-dev auth_type=iam \
    bound_iam_principal_arn=arn:aws:iam::123456789012:role/aws-ec2role-for-vault-authmethod \
    policies=billing-service-dev token_ttl=8 max_ttl=8

Success! Data written to: auth/aws/role/billing-service-dev

For AppRole auth:

Create a role called “billing-service-dev” for the application and map it to the “billing-service-dev” policy.

$ vault write auth/approle/role/billing-service-dev \
    secret_id_num_uses=1 \
    secret_id_ttl=15m \
    token_policies="billing-service-dev" \
    token_ttl=1h \
    token_max_ttl=1h

Note

The application is responsible for renewing the Vault token once it authenticates using the SecretID. Recall that limiting the SecretID TTL and its number of uses means that a new SecretID will need to be delivered to the application once the application's Vault token reaches its maximum TTL and can no longer be renewed.

Step 6: Validate access

For OIDC auth:

Log in as a user belonging to the “billing-service-owner” group. You should see that the “billing-service-owner” identity policy is attached to the token.

$ export VAULT_NAMESPACE=<org level namespace>

$ vault login -method=oidc

Waiting for OIDC authentication to complete...

Success! You are now authenticated. The token information displayed below

is already stored in the token helper. You do NOT need to run "vault login"

again. Future Vault requests will automatically use this token.

Key Value

--- -----

token hvs.token

token_accessor 84HTrye0clS28Elc13ZDgiXo.mFAaM

token_duration 1h

token_renewable true

token_policies ["default"]

identity_policies ["billing-service-owner"]

policies ["default" "billing-service-owner"]

token_meta_email alice@domain.com

token_meta_role default

token_meta_username alice

Create a new secret.

$ vault kv put -mount=secret billing-service pizza=pepperoni

== Secret Path ==

secret/data/billing-service

======= Metadata =======

Key Value

--- -----

created_time 2023-09-21T20:18:44.084663057Z

custom_metadata <nil>

deletion_time n/a

destroyed false

version 2

Repeat the two steps above as a member of the “billing-service-dev” group to validate access for application developers. You should only be able to read the secret under the path “secret/billing-service”.

For LDAP auth:

Log in as a user belonging to the “billing-service-owner” group. You should see that the “billing-service-owner” policy is attached to the token. With LDAP group mappings, it appears under token_policies rather than identity_policies.

$ vault login -method=ldap username=nwong

Password (will be hidden):

Success! You are now authenticated. The token information displayed below

is already stored in the token helper. You do NOT need to run "vault login"

again. Future Vault requests will automatically use this token.

Key Value

--- -----

token hvs.token

token_accessor EyhczVcVIT8BUuGU1r5GIJ16.JeR4H

token_duration 1h

token_renewable true

token_policies ["default" "billing-service-owner"]

identity_policies []

policies ["default" "billing-service-owner"]

token_meta_username nwong

Try to create a new secret.

$ vault kv put -mount=secret billing-service pizza=pepperoni

== Secret Path ==

secret/data/billing-service

======= Metadata =======

Key Value

--- -----

created_time 2023-09-21T20:18:44.084663057Z

custom_metadata <nil>

deletion_time n/a

destroyed false

version 2

Repeat the two steps above as a member of the “billing-service-dev” group to validate access for application developers. You should only be able to read the secret under the path “secret/billing-service”.

For AWS auth:

Log in from an EC2 instance attached to an instance profile that is bound to the IAM principal you configured for the billing-service-dev role. You should see that the “billing-service-dev” policy is attached to the token.

$ vault login -method=aws role=billing-service-dev

Success! You are now authenticated. The token information displayed below

is already stored in the token helper. You do NOT need to run "vault login"

again. Future Vault requests will automatically use this token.

Key Value

--- -----

token hvs.token

token_accessor cGAOT1f11td0tDCgR4Ff1Xw8.Ojft7

token_duration 8s

token_renewable true

token_policies ["default" "billing-service-dev"]

identity_policies []

policies ["default" "billing-service-dev"]

token_meta_client_arn arn:aws:sts::123456789012:assumed-role/aws-ec2role-for-vault-authmethod/i-02ddcf5aed1121703

token_meta_client_user_id AROATYM2SX6XH5UEKGF7G

token_meta_role_id 53f43687-2f55-c5e9-3570-c51b1cb69763

token_meta_account_id 123456789012

token_meta_auth_type iam

token_meta_canonical_arn arn:aws:iam::123456789012:role/aws-ec2role-for-vault-authmethod

Verify that you can read the secret under the path “secret/billing-service”.

$ vault kv get -mount=secret billing-service

== Secret Path ==

secret/data/billing-service

======= Metadata =======

Key Value

--- -----

created_time 2023-09-21T21:38:43.532185535Z

custom_metadata <nil>

deletion_time n/a

destroyed false

version 1

=== Data ===

Key Value

--- -----

foo bar

For AppRole auth:

Read the RoleID using the command below.

$ ROLE_ID=$(vault read -field=role_id auth/approle/role/billing-service-dev/role-id)

Next, generate a SecretID for the role.

$ SECRET_ID=$(vault write -force -field=secret_id auth/approle/role/billing-service-dev/secret-id)

Login using the “billing-service-dev” role.

$ vault write auth/approle/login role_id=$ROLE_ID secret_id=$SECRET_ID

Key Value

--- -----

token hvs.token

token_accessor JhFFIcLlqdjmM6Rq15v0DkGW

token_duration 768h

token_renewable true

token_policies ["default" "billing-service-dev"]

identity_policies []

policies ["default" "billing-service-dev"]

token_meta_role_name billing-service-dev

Verify that you can read the secret under the path “secret/billing-service”.

$ vault kv get -mount=secret billing-service

== Secret Path ==

secret/data/billing-service

======= Metadata =======

Key Value

--- -----

created_time 2023-09-21T21:38:43.532185535Z

custom_metadata <nil>

deletion_time n/a

destroyed false

version 1

=== Data ===

Key Value

--- -----

foo bar

Consumption of secrets

At this point, you should have one human auth method (OIDC or LDAP) and one machine auth method (AWS or AppRole) enabled, allowing both humans and machines to authenticate to Vault. You have also populated some static secrets in the KV v2 secrets engine and created policies to enforce permissions on which identities can access specific secrets. The next step is to consider how to deliver these secrets from Vault to the application.

Secret consumption patterns

There are two patterns for your applications to fetch secrets from Vault: direct API integration or via the Vault Agent.

Direct API integration requires that your application make API calls to authenticate with Vault and access secrets. To do this, you could either write custom integration code or depend on a third party client library. Regardless of which option you choose, it requires that your application be “Vault-aware”, meaning that you need to write and maintain application code to enable your application to communicate with Vault. For some applications, this is not a problem and may actually be the preferred method. For example, if you have only a handful of applications or you want to keep strict, customized control over the way each application interacts with Vault, you might be fine with the added overhead of maintaining that code and making sure a member of each application's team is trained to understand Vault and that code.

In other situations — typically in large enterprises — updating each application's code base could be a monumental task or simply a non-starter for several reasons:

- If your organization has hundreds or thousands of applications, you may not have the time, resources, or expertise to update and maintain Vault integration code in every application.

- Your organization may not permit the teams deploying some applications to add the Vault integration code, or any code. For example, certain legacy applications may be too brittle to allow the addition of Vault integration code.

- The applications (secrets consumers) and the systems (secrets originators) are, in many cases, managed by different teams. This makes coordinating the maintenance of Vault integration code into a clean workflow very difficult.

- Some teams deploy third-party applications that are not owned by the organization, and therefore it's not possible to add Vault integration code.

For those situations, we recommend a much more scalable and simpler experience — using the Vault Agent or Vault Proxy. Vault Agent can obtain secrets and provide them to applications, and Vault Proxy can act as a proxy between Vault and the application, optionally simplifying the authentication process and caching requests.

Note

Vault Proxy is a new feature introduced in Vault 1.14. Vault Agent has the capability to act as an API Proxy for Vault but this feature will be deprecated in a future release. See this table comparing the capabilities between Vault Agent and Vault Proxy.

Vault Agent

We briefly introduced Vault Agent earlier in this document when we discussed how Vault solves the “secret zero” problem. Vault Agent is a client daemon that uses the same Vault binary as the Vault server, except that it is run with the vault agent command. Here we will explore the features offered by the Vault Agent.

- Auto-Auth - Automatically authenticate to Vault and manage the token renewal process.

- Templating - Allows rendering of user-supplied templates by Vault Agent, using the token generated by the Auto-Auth step.

- Caching - Allows client-side caching of responses containing newly created tokens and responses containing leased secrets generated off of these newly created tokens. The agent also manages the renewals of the cached tokens and leases.

- Process Supervisor Mode - Runs a child process with Vault secrets injected as environment variables.

- Windows Service - Allows running the Vault Agent as a Windows service.

Auto-Auth

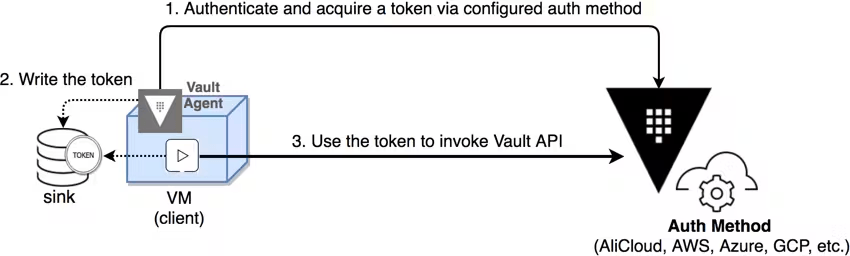

Vault is built on zero trust principles, so applications that need to fetch secrets from Vault are required to make authenticated requests by providing a Vault token. Vault Agent can make the authentication process for obtaining a Vault token easy and transparent to applications.

When Vault Agent starts up, it automatically authenticates with Vault and receives a Vault token via a process known as auto-auth. The token can then be written to disk to any number of file sinks. A file sink is a local file or a file mapped via some other process (NFS, Gluster, CIFS, etc.). In addition to file permissions, the token can be protected through Vault's response-wrapping mechanism and encryption. Applications can then use this token to request secrets from Vault.

Vault Agent also manages the lifecycle of the Vault token that is received from auto-auth. This includes timely renewal of the tokens and re-authentication if a token can no longer be renewed. The sink is updated with the new token value whenever re-authentication occurs.

The client can simply retrieve the token from the sink and connect to Vault using the token. This simplifies client integration since the Vault Agent handles the login and token refresh logic.

To leverage this feature, run the Vault binary in agent mode (vault agent -config=<config_file>) on the client. The agent configuration file must specify the auth method and sink locations that dictate where the token should be written on the filesystem.
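A minimal sketch of such a configuration file is shown below, using the AppRole role created earlier and a single file sink. The Vault address, the file paths, and the local vault-agent directory are placeholders chosen for illustration, not values from this guide.

```shell
#!/usr/bin/env bash
# Write a minimal Vault Agent configuration with AppRole auto-auth and one file sink.
# All paths and the Vault address are placeholders chosen for illustration.
CONFIG_DIR="${CONFIG_DIR:-./vault-agent}"
mkdir -p "$CONFIG_DIR"

cat > "$CONFIG_DIR/agent.hcl" <<'EOF'
vault {
  address = "https://vault.example.com:8200"
}

auto_auth {
  # Authenticate with the AppRole role created earlier (billing-service-dev).
  method "approle" {
    config = {
      role_id_file_path   = "/etc/vault-agent/role_id"
      secret_id_file_path = "/etc/vault-agent/secret_id"
    }
  }

  # Write the resulting token to a local file that the application can read.
  sink "file" {
    config = {
      path = "/run/vault-agent/token"
    }
  }
}
EOF

# Start the agent with this configuration:
#   vault agent -config="$CONFIG_DIR/agent.hcl"
```

With a single-use SecretID, as configured for the billing-service-dev role, a fresh SecretID has to be placed at secret_id_file_path before the agent needs to re-authenticate.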

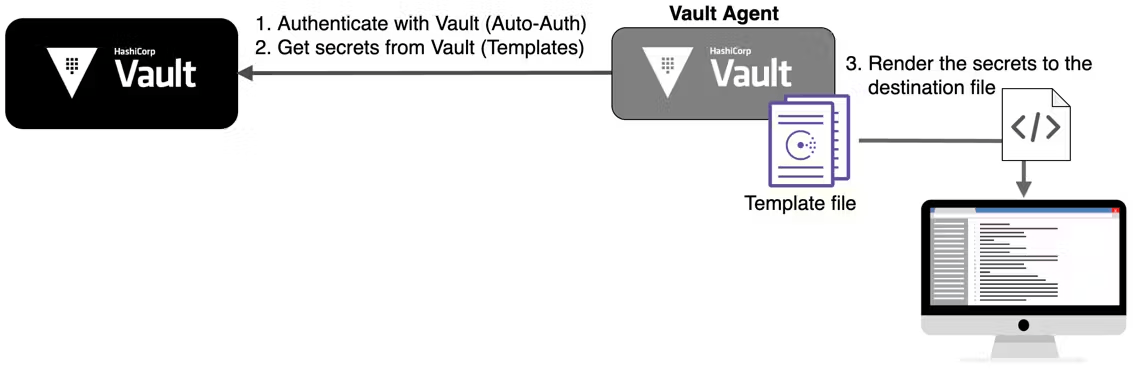

Templating

Vault Agent supports the ability to render static and dynamic secrets from Vault by using templates that describe how and where to render those secrets on the local system. Applications or service owners define these templates using Consul Template markup and then the secrets are consumed easily by the applications, eliminating the need for additional formatting and parsing. A common use case for templating is formatting credentials from Vault's database secrets engine to be rendered as database connection strings for applications to consume directly. An example of this approach is demonstrated in the tutorial here.

After the Vault Agent starts up and authenticates with Vault, it can be set up to retrieve secrets needed for an application. It writes these secrets to disk through the templates defined in the agent configuration, which the application can then use as needed.
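The sketch below writes a template stanza that renders the billing-service secret from earlier in this section into a local file. It is meant to be added to an agent configuration that already contains an auto_auth stanza; the destination path and the fragment file name are hypothetical.

```shell
#!/usr/bin/env bash
# Write a template stanza for Vault Agent. The destination path is a placeholder.
CONFIG_DIR="${CONFIG_DIR:-./vault-agent}"
mkdir -p "$CONFIG_DIR"

cat > "$CONFIG_DIR/template-stanza.hcl" <<'EOF'
template {
  # Inline Consul Template markup. For KV v2 the path includes the data/
  # segment and the key-value pairs are nested under .Data.data.
  contents    = "{{ with secret \"secret/data/billing-service\" }}foo={{ .Data.data.foo }}{{ end }}"
  destination = "/run/vault-agent/billing-service.env"
}
EOF

# Add this stanza to the agent configuration file next to auto_auth, then
# start the agent as usual:
#   vault agent -config=<config_file>
```

With the secret written in Step 1 (foo=bar), the rendered file would contain the single line foo=bar, which the application can read without parsing a Vault API response.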

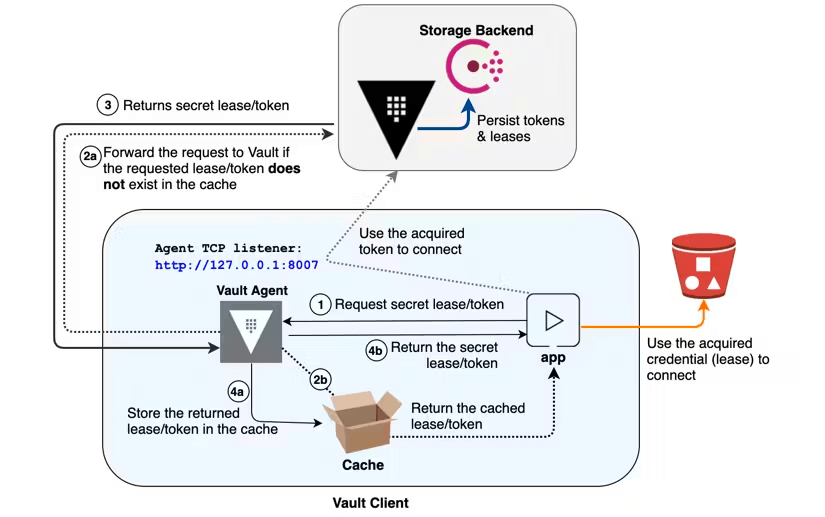

Caching

When Vault is controlling access to all of your secrets, it is very important to ensure that Vault's performance scales as consumption grows, so it can handle the corresponding load. The Vault Agent supports the ability to proxy and cache requests to Vault as well as responses from Vault. This helps reduce the number of requests sent to Vault servers at one time, reducing the peak load on Vault servers.

Process supervisor mode

Vault Agent's Process Supervisor Mode allows secrets to be injected into a process via environment variables using Consul Template markup.

It is common for applications to load certain configurations from environment variables. When the application starts up, it reads environment variables and uses them to initialize connections to various databases, message queues, or other services. Often, these initialization parameters contain secrets such as database credentials, certificates, and API keys.

In this mode, Vault Agent will start the application as a child process with the environment variables populated using the configured secrets. The Vault Agent will act as a process supervisor to the application and will restart it when the configured static secrets change.
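A sketch of the stanzas involved is shown below, assuming a Vault Agent version that supports process supervisor mode. The environment variable name BILLING_FOO and the application command ./billing-service are hypothetical; the stanzas are meant to sit in an agent configuration that already contains auto_auth.

```shell
#!/usr/bin/env bash
# Write the process supervisor stanzas for Vault Agent to a local fragment file.
CONFIG_DIR="${CONFIG_DIR:-./vault-agent}"
mkdir -p "$CONFIG_DIR"

cat > "$CONFIG_DIR/supervisor-stanzas.hcl" <<'EOF'
# Populate the BILLING_FOO environment variable from the KV v2 secret.
env_template "BILLING_FOO" {
  contents             = "{{ with secret \"secret/data/billing-service\" }}{{ .Data.data.foo }}{{ end }}"
  error_on_missing_key = true
}

# Run the application as a child process and restart it when a secret changes.
exec {
  command                   = ["./billing-service"]
  restart_on_secret_changes = "always"
  restart_stop_signal       = "SIGTERM"
}
EOF

# Add these stanzas to the agent configuration file, then start the agent:
#   vault agent -config=<config_file>
```

The application only reads BILLING_FOO from its environment and needs no Vault-specific code.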

Reference Materials:

- Vault Agent's process supervisor mode documentation

- Vault Agent - secrets as environment variables tutorial

Windows service

Vault Agent can be run as a Windows service. In order to do this, Vault Agent must be registered with the Windows Service Control Manager. After Vault Agent is registered, it can be started like any other Windows service.

Vault Proxy

The Vault Proxy offers an alternative integration method for Vault clients. It is designed to simplify the integration workflow for clients that need to make direct API calls to Vault.

The Vault Proxy acts as an API proxy for Vault. Any requests sent to the Vault Proxy will be forwarded to the Vault server, and these requests can be configured to optionally allow or force the automatic use of the auto-auth token, simplifying the authentication process. It also allows client-side caching of responses to reduce the load to the Vault server.

Unlike Vault Agent templating, clients must be able to integrate with Vault's APIs in order to use Vault Proxy. See this table for a comparison of the capabilities between Vault Agent and Vault Proxy.
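The following sketch shows what a Vault Proxy configuration along these lines might look like, assuming Vault 1.14 or later. The Vault address, file paths, and the local listener address 127.0.0.1:8100 are placeholders; TLS is disabled on the listener only because it is bound to localhost in this example.

```shell
#!/usr/bin/env bash
# Write a minimal Vault Proxy configuration: auto-auth, API proxying with the
# auto-auth token, response caching, and a local listener.
CONFIG_DIR="${CONFIG_DIR:-./vault-proxy}"
mkdir -p "$CONFIG_DIR"

cat > "$CONFIG_DIR/proxy.hcl" <<'EOF'
vault {
  address = "https://vault.example.com:8200"
}

auto_auth {
  method "approle" {
    config = {
      role_id_file_path   = "/etc/vault-proxy/role_id"
      secret_id_file_path = "/etc/vault-proxy/secret_id"
    }
  }
}

# Attach the auto-auth token to requests that arrive without a token.
# Setting the value to "force" would use it for every request instead.
api_proxy {
  use_auto_auth_token = true
}

# An empty cache stanza enables client-side caching.
cache {}

# Local endpoint that applications send their Vault API calls to.
listener "tcp" {
  address     = "127.0.0.1:8100"
  tls_disable = true
}
EOF

# Start the proxy, then point clients at the listener instead of Vault:
#   vault proxy -config="$CONFIG_DIR/proxy.hcl"
#   VAULT_ADDR=http://127.0.0.1:8100 vault kv get -mount=secret billing-service
```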

The Vault Agent advantage

The Vault Agent simplifies the zero trust adoption journey by reducing the effort and time needed by your application teams to integrate with Vault. Applications can easily integrate with the agent, which handles authentication into Vault along with the fetching, rendering, and lifecycle management of application secrets and the caching of these secrets to reduce the load on Vault.

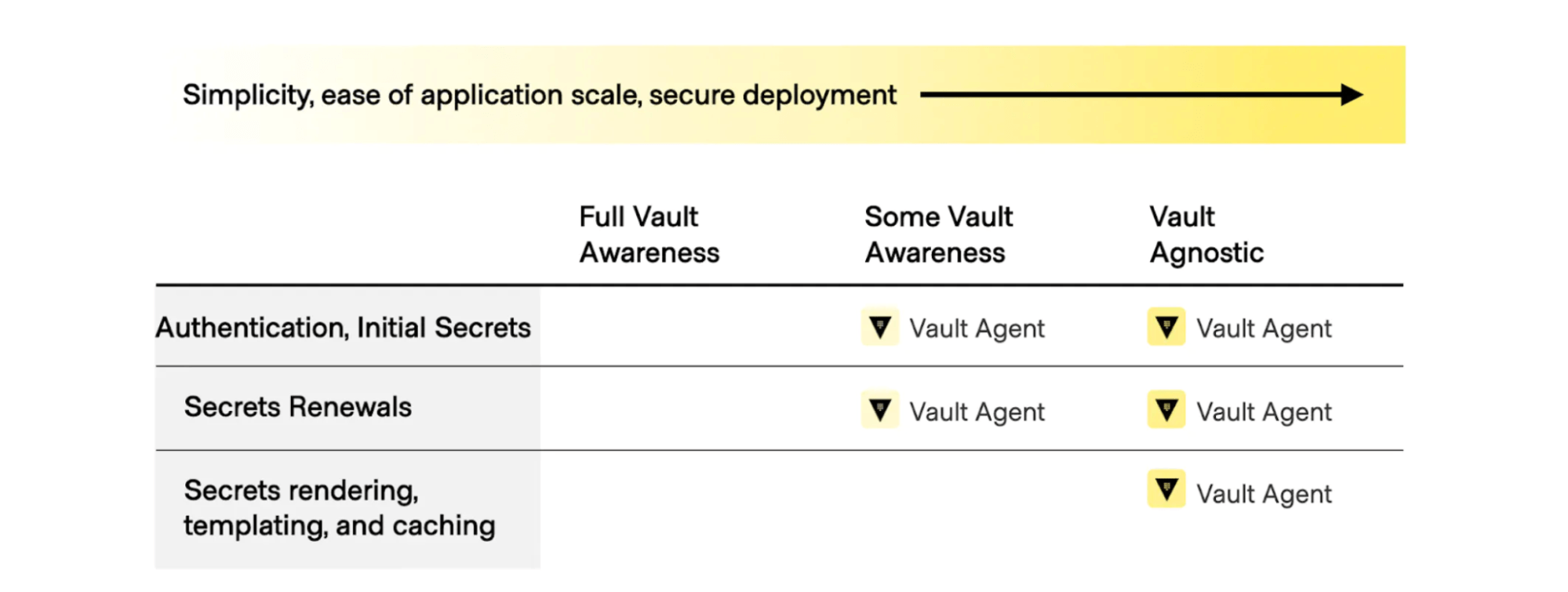

As the diagram above shows, tasks such as fetching the initial secrets, renewing secrets, rendering templates, and caching become easier to manage at scale as they become more Vault agnostic from the perspective of your application.

Vault Agent best practices

Deployment pattern

We recommend deploying one Vault Agent per application that needs access to Vault. Each application then has its own login and identity with Vault, which aligns with the principle of least privilege. When using machine identity authentication (e.g., AWS, GCP, Azure), deploy one Vault Agent per host. If multiple applications share a host, run a separate agent instance for each application and use an auth method, such as AppRole, that is not tied to the machine's identity.

Telemetry

The agent collects runtime metrics about its performance, auto-auth, and cache status. We recommend that you enable telemetry and send these metrics to a monitoring platform. The Vault Agent documentation lists the available metrics.
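
A minimal sketch of enabling telemetry in the agent configuration is shown below. It assumes a StatsD-compatible collector listening on the local host. The collector address is hypothetical, and other sinks supported by the telemetry stanza may suit your monitoring platform better.

```hcl
# Sketch only: forward Vault Agent metrics to a StatsD-compatible collector.
# The collector address is an illustrative assumption.
telemetry {
  statsd_address   = "127.0.0.1:8125"

  # Do not prefix metric names with the local hostname.
  disable_hostname = true
}
```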

Integration pattern

How you actually integrate Vault Agent into your application deployment workflow will vary based on several factors. Some questions to ask to help decide appropriate usage:

- What is the lifecycle of my application? Is it more ephemeral or long-lived?

- What are the lifecycles of my authentication tokens? Are they long-lived and continually renewed to show the liveness of a service, or do I want to enforce periodic re-authentication?

- Do I have a group of applications running on my host which each need their own token? Can I use a native authentication capability (e.g. AWS IAM, K8s, Azure MSI, Google Cloud IAM, etc.)?

The answers to these questions will help you decide whether Vault Agent should run as a daemon or as a prerequisite of a service configuration. Take, for example, the following systemd service definition for running Nomad:

    [Unit]
    Description=Nomad Agent
    Requires=consul-online.target
    After=consul-online.target

    [Service]
    KillMode=process
    KillSignal=SIGINT
    Environment=VAULT_ADDR=http://active.vault.service.consul:8200
    Environment=VAULT_SKIP_VERIFY=true
    ExecStartPre=/usr/local/bin/vault agent -config /etc/vault-agent.d/vault-agent.hcl
    ExecStart=/usr/bin/nomad-vault.sh
    ExecReload=/bin/kill -HUP $MAINPID
    Restart=on-failure
    RestartSec=2
    StartLimitBurst=3
    StartLimitIntervalSec=10
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target

Notice the ExecStartPre directive, which runs Vault Agent as a prerequisite before Nomad starts. To prevent Vault Agent from running as a daemon, enable the exit_after_auth option, which instructs the agent to exit after a single successful authentication and after all templates have rendered.

The service's startup script, /usr/bin/nomad-vault.sh, expects a Vault token to be available, as shown below:

    #!/usr/bin/env bash

    # Start Nomad with a Vault token unwrapped from the agent's sink file.
    if [ -f /mnt/ramdisk/token ]; then
      # The sink file holds a response-wrapped token; unwrap it before use.
      exec env VAULT_TOKEN="$(vault unwrap -field=token "$(jq -r '.token' /mnt/ramdisk/token)")" \
        /usr/local/bin/nomad agent \
          -config=/etc/nomad.d \
          -vault-tls-skip-verify=true
    else
      echo "Nomad service failed due to missing Vault token"
      exit 1
    fi

Applications that expect Vault tokens typically look for a VAULT_TOKEN environment variable. Here, Vault Agent auto-auth obtains a token and writes it, response-wrapped, to a RAM disk. The Nomad startup script then reads the response-wrapped token from the RAM disk, unwraps it, and sets the VAULT_TOKEN environment variable before starting Nomad.
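
The agent configuration referenced by ExecStartPre is not shown in this section. A minimal sketch of what /etc/vault-agent.d/vault-agent.hcl could contain for this run-once pattern follows. The AppRole file paths and the wrap TTL are assumptions made for illustration, and the Vault address is taken from the VAULT_ADDR environment variable set in the unit file.

```hcl
# Sketch of a run-once agent configuration for the systemd example above.
# The AppRole file paths and the wrap TTL are illustrative assumptions.

# Exit after one successful authentication instead of running as a daemon.
exit_after_auth = true

auto_auth {
  method "approle" {
    config = {
      role_id_file_path   = "/etc/vault-agent.d/role_id"
      secret_id_file_path = "/etc/vault-agent.d/secret_id"
    }
  }

  # Write a response-wrapped token to the RAM disk.
  # The startup script unwraps it before starting Nomad.
  sink "file" {
    wrap_ttl = "5m"
    config = {
      path = "/mnt/ramdisk/token"
    }
  }
}
```

Setting wrap_ttl on the sink is what makes the file contain a wrapping token rather than the token itself, which is why the startup script calls vault unwrap.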

Similarly, you can use Vault Agent templating to generate application configuration files as part of your application startup script. This would enable your application to be completely agnostic from Vault. For example, the template below renders the application settings for a .NET Core application. It contains most of the default configuration for an ASP.NET Core application but creates a ConnectionString based on the database username and password from Vault using the database secrets engine.

    {
      "Logging": {
        "LogLevel": {
          "Default": "Information",
          "Microsoft": "Warning",
          "Microsoft.Hosting.Lifetime": "Information"
        }
      },
      "AllowedHosts": "*",
      "ConnectionStrings": {
        {{- with secret "projects-api/database/creds/projects-api-role" }}
        "Database": "Server=.;Database=HashiCorp;user id={{ .Data.username }};password={{ .Data.password }}"
        {{- end }}
      }
    }

Vault Agent updates the application settings each time Vault rotates the database username and password. ASP.NET Core supports live reload of the application settings each time the settings file changes. This allows your database credentials to be short-lived, which significantly reduces the exposure if they are leaked. If your application does not support live reload of its configuration, you can use the exec option in the template block to run a command that restarts the application each time the rendered template changes. For more details on using Vault Agent to generate application configurations, see the Vault Agent with .NET Core tutorial.
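
For applications without live reload, the restart-on-change approach could be sketched as follows. The template paths and the systemd unit name are hypothetical. Substitute whatever command restarts your application.

```hcl
# Sketch only: restart a service whenever the rendered settings file changes.
# The file paths and the unit name "projects-api" are illustrative assumptions.
template {
  source      = "/etc/vault-agent.d/appsettings.json.tpl"
  destination = "/opt/projects-api/appsettings.json"

  # Runs after the template is rendered and its output has changed.
  exec {
    command = ["systemctl", "restart", "projects-api"]
    timeout = "30s"
  }
}
```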

Configure the Vault Agent

In this section, you will configure the Vault Agent to consume secrets from the KV v2 secrets engine.

The content in this section assumes that you have completed the configurations in the previous sections. Before you begin, you should have:

- Configured either the OIDC or LDAP auth method for human access

- Configured either the AWS or AppRole auth method for machine access

- Enabled the KV v2 secrets engine and populated it with some secrets

- Configured policies to allow applications to read their required static secrets

Vault Agent and AWS auth

Before you begin, ensure that you have configured the AWS auth method and tested that you can log in successfully from an EC2 instance.

Step 1: Create the Vault Agent configuration file

Log in to your EC2 instance and create a Vault Agent configuration called “vault-agent-template.hcl” using the example below.

The auto_auth block uses the aws auth method enabled at the auth/aws path on the Vault server. The namespace value specifies the namespace where your aws auth method is mounted; replace it with the name of your org-level namespace. Vault Agent uses the billing-service-dev role to authenticate.

The template block configures Vault Agent templating. The contents parameter embeds a Consul Template template directly in the configuration file rather than supplying the source path to a template file. The destination parameter tells the agent where to write the rendered output. The AppRole example later in this section shows a configuration that supplies the source path to a template file instead.

Finally, the vault block configures how the Agent connects to the remote Vault server.

    pid_file = "./pidfile"

    auto_auth {
      method "aws" {
        namespace  = "<org level namespace>"
        mount_path = "auth/aws"
        config = {
          type = "iam"
          role = "billing-service-dev"
        }
      }
    }

    template {
      contents    = "{{ with secret \"secret/billing-service\" }}{{ .Data.data.foo }}{{ end }}"
      destination = "/home/vault-user/render-content.txt"
    }

    vault {
      address = "https://dev.vault.org:8200"
      ca_cert = "/opt/vault/tls/ca.pem"
    }

Step 2: Start the Vault Agent

Execute the following command to start the agent.

$ vault agent -config=./vault-agent-template.hcl

You should see output similar to the following:

2023-10-17T19:31:51.080Z [INFO] agent.exec.server: starting exec server

2023-10-17T19:31:51.081Z [INFO] agent.exec.server: no env templates or exec config, exiting

2023-10-17T19:31:51.082Z [INFO] agent.auth.handler: starting auth handler

2023-10-17T19:31:51.082Z [INFO] agent.auth.handler: authenticating

2023-10-17T19:31:51.084Z [INFO] agent.sink.server: starting sink server

2023-10-17T19:31:51.085Z [INFO] agent.template.server: starting template server

2023-10-17T19:31:51.085Z [INFO] (runner) creating new runner (dry: false, once: false)

2023-10-17T19:31:51.086Z [INFO] (runner) creating watcher

2023-10-17T19:31:51.138Z [INFO] agent.auth.handler: authentication successful, sending token to sinks

2023-10-17T19:31:51.139Z [INFO] agent.auth.handler: starting renewal process

2023-10-17T19:31:51.140Z [INFO] agent.template.server: template server received new token

2023-10-17T19:31:51.140Z [INFO] (runner) stopping

2023-10-17T19:31:51.140Z [INFO] (runner) creating new runner (dry: false, once: false)

2023-10-17T19:31:51.140Z [INFO] (runner) creating watcher

2023-10-17T19:31:51.143Z [INFO] (runner) starting

2023-10-17T19:31:51.152Z [INFO] agent.auth.handler: renewed auth token

2023-10-17T19:31:51.175Z [INFO] (runner) rendered "(dynamic)" => "/home/ssm-user/render-content.txt"

^C==> Vault Agent shutdown triggered

2023-10-17T19:31:58.456Z [INFO] agent.exec.server: exec server stopped

2023-10-17T19:31:58.456Z [INFO] (runner) stopping

2023-10-17T19:31:58.456Z [INFO] agent.template.server: template server stopped

2023-10-17T19:31:58.456Z [INFO] agent.auth.handler: shutdown triggered, stopping lifetime watcher

2023-10-17T19:31:58.456Z [INFO] agent.auth.handler: auth handler stopped

2023-10-17T19:31:58.456Z [INFO] agent.sink.server: sink server stopped

2023-10-17T19:31:58.456Z [INFO] agent: sinks finished, exiting

Press Ctrl+C to stop the agent, as shown at the end of the output above. Now navigate to the directory where you configured the agent to write the rendered template. The file should contain the value of the secret.

$ cat render-content.txt

bar

Step 3: Render secrets as environment variables

Another way to provide secrets to your application is to inject them as environment variables. Vault Agent's process supervisor mode allows Vault secrets to be injected into a process via environment variables using Consul Template markup.

Create an agent configuration called “vault-agent-envar.hcl” using the example below.

    pid_file = "./pidfile"

    auto_auth {
      method "aws" {
        namespace  = "<org level namespace>"
        mount_path = "auth/aws"
        config = {
          type = "iam"
          role = "billing-service-dev"
        }
      }
    }

    template_config {
      static_secret_render_interval = "1m"
      exit_on_retry_failure         = true
    }

    vault {
      address = "https://dev.vault.org:8200"
      ca_cert = "/opt/vault/tls/ca.pem"
    }

    env_template "MY_SECRET" {
      contents             = "{{ with secret \"secret/data/billing-service\" }}{{ .Data.data.foo }}{{ end }}"
      error_on_missing_key = true
    }

    exec {
      command                   = ["./kv-demo.sh"]
      restart_on_secret_changes = "always"
      restart_stop_signal       = "SIGTERM"
    }

The template_config block contains settings that affect all templates. The static_secret_render_interval entry configures how often Vault Agent re-renders templates that use non-leased secrets such as KV v2; here, the agent re-renders the template every minute. The exit_on_retry_failure entry configures Vault Agent to exit after it has exhausted its template retry attempts due to failures. You can configure the number of retries with the retry stanza; the default is 12.

The env_template block maps the template specified in contents to the environment variable named in the block's label. In the example, we inject an environment variable called MY_SECRET with the value of the key foo from the secret at secret/data/billing-service. The error_on_missing_key setting tells the agent to exit with an error if the key being accessed does not exist.

The exec block configures how the child process is started. The command entry specifies the command to start the child process. restart_on_secret_changes controls whether the agent will restart the child process on secret changes. For example, if the static secret is updated (on static_secret_render_interval), then the agent will restart the child process. The restart_stop_signal is the signal that the agent will send to the child process when a secret has been updated and the process needs to be restarted. The process has 30 seconds after this signal is sent until SIGKILL is sent to force the child process to stop.
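
The retry count mentioned in the template_config discussion is set in a retry stanza inside the vault block. The sketch below lowers it so that a misconfigured agent fails sooner. The value 5 is an arbitrary choice for illustration, not a recommendation.

```hcl
# Sketch only: reduce the number of retries before the agent gives up.
# The value 5 is an illustrative assumption; the default is 12.
vault {
  address = "https://dev.vault.org:8200"
  ca_cert = "/opt/vault/tls/ca.pem"

  retry {
    num_retries = 5
  }
}
```

Combined with exit_on_retry_failure = true, a smaller retry count causes the agent, and therefore the supervised child process, to stop earlier when secrets cannot be fetched.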

Create a simple shell script called kv-demo.sh which will display the value of the environment variable named MY_SECRET.

    #!/bin/sh

    echo
    echo "==== DEMO APP ===="
    echo "MY_SECRET = ${MY_SECRET}"

    sleep 100

Step 4: Start the Vault Agent with new configuration

Start the agent by executing the following command.

$ vault agent -config=./vault-agent-envar.hcl

You should see output similar to the following:

2023-10-17T19:35:45.423Z [INFO] agent.exec.server: starting exec server

2023-10-17T19:35:45.423Z [INFO] (runner) creating new runner (dry: true, once: false)

2023-10-17T19:35:45.424Z [INFO] (runner) creating watcher

2023-10-17T19:35:45.424Z [INFO] agent.auth.handler: starting auth handler

2023-10-17T19:35:45.424Z [INFO] agent.auth.handler: authenticating

2023-10-17T19:35:45.424Z [INFO] agent.sink.server: starting sink server

2023-10-17T19:35:45.424Z [INFO] agent.template.server: starting template server

2023-10-17T19:35:45.424Z [INFO] agent.template.server: no templates found

2023-10-17T19:35:45.469Z [INFO] agent.auth.handler: authentication successful, sending token to sinks

2023-10-17T19:35:45.469Z [INFO] agent.auth.handler: starting renewal process

2023-10-17T19:35:45.470Z [INFO] agent.exec.server: exec server received new token

2023-10-17T19:35:45.470Z [INFO] (runner) stopping

2023-10-17T19:35:45.470Z [INFO] (runner) creating new runner (dry: true, once: false)

2023-10-17T19:35:45.470Z [INFO] (runner) creating watcher

2023-10-17T19:35:45.470Z [INFO] (runner) starting

2023-10-17T19:35:45.480Z [INFO] agent.auth.handler: renewed auth token

2023-10-17T19:35:45.494Z [INFO] (runner) rendered "(dynamic)" => "MY_SECRET"

2023-10-17T19:35:47.495Z [INFO] agent.exec.server: [INFO] (child) spawning: ./kv-demo.sh

==== DEMO APP ====

MY_SECRET = bar

Now change the value of the static secret in Vault. The agent will re-render the secret every minute, and if the value of the secret changes, it will restart the child process and the updated secret value will be shown.

2023-09-26T19:32:36.727Z [INFO] (runner) rendered "(dynamic)" => "MY_SECRET"

2023-09-26T19:32:38.727Z [INFO] agent.exec.server: stopping process: process_id=118363

2023-09-26T19:32:38.728Z [INFO] agent.exec.server: [INFO] (child) stopping process

2023-09-26T19:32:38.728Z [INFO] agent.exec.server: [INFO] (child) spawning: ./kv-demo.sh

==== DEMO APP ====

MY_SECRET = bazzzz

Vault Agent and AppRole auth

Before you begin, ensure that you have configured the AppRole auth method and tested that you can log in successfully.

Step 1: Create the Vault Agent configuration file

Log in to the VM where the application is deployed and create a Vault Agent configuration called “vault-agent-template.hcl” using the example below.

The auto_auth block uses the approle auth method enabled at the auth/approle path on the Vault server. The namespace value specifies the namespace where your approle auth method is mounted; replace it with the name of your org-level namespace. The role_id_file_path is the path to the file containing the Role ID, and the secret_id_file_path is the path to the file containing the Secret ID. By default, Vault Agent deletes the Secret ID file after reading it. The secret_id_response_wrapping_path tells the agent that the Secret ID is response-wrapped and that its creation path should be verified against the path set here. Using a response-wrapped Secret ID is recommended, as discussed in the AppRole Best Practice section.

The template block configures Vault agent templating. The source parameter specifies the source path to the template file. The destination parameter tells the agent where the output will be written to.

Finally, the vault block configures how the Agent connects to the remote Vault server.

    pid_file = "./pidfile"

    auto_auth {
      method {
        type      = "approle"
        namespace = "demo"
        config = {
          role_id_file_path                = "/home/vault/app1_role_id"
          secret_id_file_path              = "/home/vault/app1_secret_id"
          secret_id_response_wrapping_path = "auth/approle/role/billing-service-dev/secret-id"
        }
      }
    }

    template {
      source      = "/home/vault/kv.tpl"
      destination = "/home/vault/kv.html"
    }

    vault {
      address = "https://dev.vault.org:8200"
      ca_cert = "/opt/vault/tls/ca.pem"
    }

Step 2: Create the template file

Create the template file referenced in the agent configuration. The example below creates an HTML file by fetching the key foo from the secret at the path secret/billing-service. See the Consul Template documentation for more information on the templating language.

    tee /home/vault/kv.tpl <<EOF
    {{ with secret "secret/billing-service" }}
    <html>
    <head>
    </head>
    <body>
    <li><strong>foo</strong> : {{ .Data.data.foo }}</li>
    </body>
    </html>
    {{ end }}
    EOF

Step 3: Start the Vault Agent

Start the agent by executing the following command.

$ vault agent -config=./vault-agent-template.hcl

You should see output similar to the following:

2023-10-17T20:51:51.119Z [INFO] agent.exec.server: starting exec server

2023-10-17T20:51:51.119Z [INFO] agent.exec.server: no env templates or exec config, exiting

2023-10-17T20:51:51.119Z [INFO] agent.auth.handler: starting auth handler

2023-10-17T20:51:51.119Z [INFO] agent.auth.handler: authenticating

2023-10-17T20:51:51.120Z [INFO] agent.sink.server: starting sink server

2023-10-17T20:51:51.120Z [INFO] agent.template.server: starting template server

2023-10-17T20:51:51.120Z [INFO] (runner) creating new runner (dry: false, once: false)

2023-10-17T20:51:51.122Z [INFO] (runner) creating watcher

2023-10-17T20:51:51.189Z [INFO] agent.auth.handler: authentication successful, sending token to sinks

2023-10-17T20:51:51.189Z [INFO] agent.auth.handler: starting renewal process

2023-10-17T20:51:51.194Z [INFO] agent.template.server: template server received new token

2023-10-17T20:51:51.194Z [INFO] (runner) stopping

2023-10-17T20:51:51.198Z [INFO] (runner) creating new runner (dry: false, once: false)

2023-10-17T20:51:51.199Z [INFO] (runner) creating watcher

2023-10-17T20:51:51.203Z [INFO] (runner) starting

2023-10-17T20:51:51.211Z [INFO] agent.auth.handler: renewed auth token

2023-10-17T20:51:51.237Z [INFO] (runner) rendered "/vault-agent/kv.tpl" => "/vault-agent/kv.html"

Now navigate to the directory where you have configured the agent to output the templated secrets. You should see that the content of the file contains the value of the secret.

$ cat kv.html

<html>

<head>

</head>

<body>

<li><strong>foo</strong> : bar</li>

</body>

</html>

Summary

In this section, we covered how Vault stores static secrets and reviewed best practices for organizing them. We recommend mounting a single KV v2 secrets engine with sub-paths per application concern. This reduces operational complexity by centralizing all static secrets under a single mount and avoids the risk of hitting mount table limits. Next, we discussed best practices for creating and managing Vault policies. We recommend adhering to the principle of least privilege when writing policies and managing your policies in a version control system. Finally, we discussed patterns for secret consumption and how Vault Agent can automate this workflow and keep your applications Vault agnostic. At this point, you should have a well-defined workflow for your application owners to create static secrets in Vault and for your applications to consume them.