Configuring for first use

This section will show you how to configure HCP Terraform (or Enterprise) to meet your requirements for delivering IaC services to your internal customers.

Foundational organization concepts

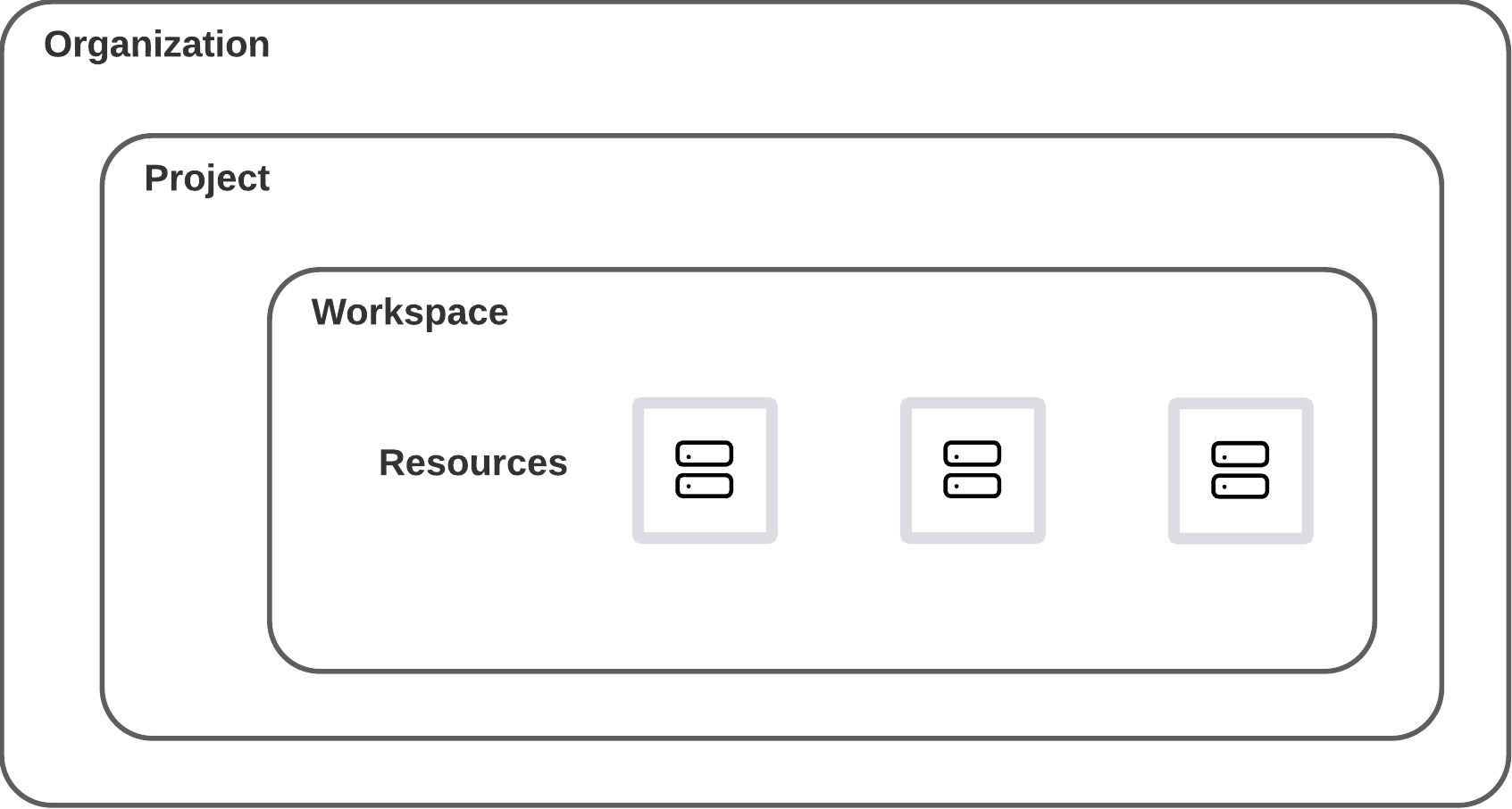

HCP Terraform helps you organize the infrastructure resources you want to manage with Terraform. It begins with the organization concept, which usually corresponds to your entire organization. You can have one or more Projects under the Organization. Each project can then manage one or more workspaces. Workspaces are the containers to manage a set of related infrastructure resources.

Access management

Access management in HCP Terraform is based on three components:

- User accounts

- Teams

- Permissions

A user account represents a user of HCP Terraform and is used to authenticate, authorize, personalize, and track user activities within HCP Terraform. A team is a container used to group related users together and helps to assign access rights easily. A user can be a member of one or more teams. Finally, permissions are the specific rights and privileges granted to groups of users to perform certain actions or access particular resources within HCP Terraform.

When managing your HCP Terraform environment, it is recommended to implement SAML/SSO for user management, in conjunction with RBAC. SAML/SSO provides a centralized and secure approach to user authentication, while RBAC allows you to define and manage granular access controls based on roles and permissions. By combining SAML/SSO and RBAC, you can streamline user onboarding and offboarding processes, enforce strong authentication measures, and ensure that users have the appropriate level of access to resources within your Terraform environment. This integrated approach enhances security, simplifies user management, and provides a seamless experience for you and your team members.

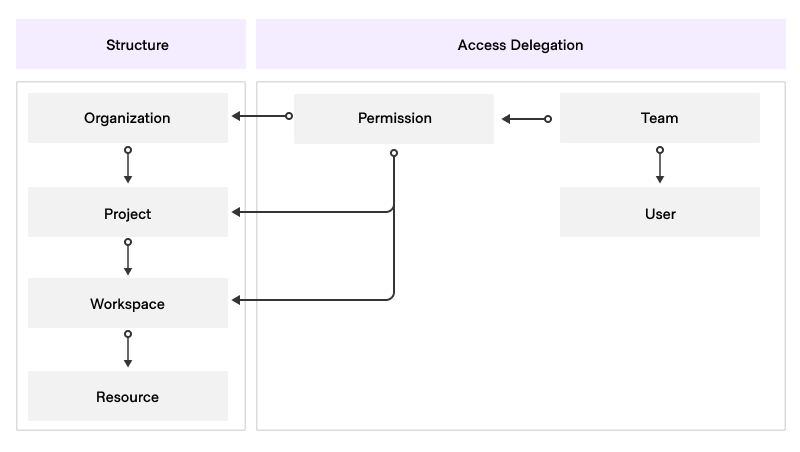

Delegate access

In HCP Terraform it is possible to assign team permissions at the following levels:

- Organization

- Project

- Workspace

The level at which a permission is set defines the scope of this permission. For example, a permission assigned at the:

- workspace level applies only to that workspace and not to any other workspace.

- project level applies to the project and the workspaces it manages, but not to workspaces managed by another project.

- organization level applies everywhere in that organization.
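The scoping rules above can be modelled in a few lines. This is only an illustrative sketch, not HCP Terraform's implementation: the `Workspace` and `Grant` types and the `grant_applies` function are hypothetical names introduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workspace:
    name: str
    project: str
    organization: str


@dataclass(frozen=True)
class Grant:
    level: str   # "organization", "project", or "workspace"
    target: str  # name of the organization, project, or workspace


def grant_applies(grant: Grant, ws: Workspace) -> bool:
    """Return True if the scope of the grant covers the given workspace."""
    if grant.level == "organization":
        return grant.target == ws.organization
    if grant.level == "project":
        return grant.target == ws.project
    if grant.level == "workspace":
        return grant.target == ws.name
    raise ValueError(f"unknown level: {grant.level}")
```

For example, a grant at the project level for `bu1-app1` covers every workspace in that project but none of the workspaces in `bu2-app9`.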

A word on naming conventions

As you configure your HCP Terraform instance, you will need to create and name a number of entities: teams, projects, workspaces, variable sets, etc. We recommend that you create and maintain a naming convention document which should include the naming conventions for each entity, as well as any other conventions you decide to use such as prefixes or suffixes. This document will help ensure that your Terraform experience is well-organized and easy to navigate.

Organizations, projects and workspaces

Organizations

The organization in Terraform Enterprise serves as the highest-level construct, encompassing various components and configurations. These include the following:

- Access management

- Single sign-on

- Users and teams

- Organization API tokens

- Projects and workspaces

- SSH keys

- Private registry

- VCS providers

- Variable sets

- Policies

- Agent pools

We recommend centralizing core provisioning work within a single organization in HCP Terraform to ensure efficient provisioning and management.

This approach is compatible with a multi-tenant environment where you have to support multiple business units (BUs) within the same organization. Our robust role-based access control system combined with projects ensures that each BU has its own set of permissions and access levels. Projects enable you to divide workspaces or teams within the organization. We will discuss them in more detail in the following section.

Terraform Enterprise maintains a run queue governed by a workload concurrency setting and a per-worker RAM allocation. During development of the service, the Platform Team should analyze these:

- The default concurrency is ten, meaning Terraform Enterprise will spawn a maximum of ten worker containers and thus will process 10 workspace runs simultaneously.

- The system will allocate 512 MB of RAM to each container. For most workloads, this will likely be sufficient, but the Platform Team should be aware that for workloads requiring more resident memory than this, the container daemon will terminate the container when it runs out of memory (OOM) and return an error to the user. Proactive resource monitoring and reporting is thus key.

- The default RAM requirement for workspace processing is thus 5120 MB (ten workers at 512 MB each). Vertical scaling of the compute nodes may be required if larger RAM requirements are necessary, but we recommend that customers instead make use of HCP Terraform agents to offload the processing of large workloads to a dedicated agent pool (HCP Terraform agents also work with Terraform Enterprise). In this case, the affected workspaces should be switched from the remote execution mode to the agent execution mode.

- For active/active configurations, the concurrency is multiplied by the number of active nodes (HashiCorp supports up to a maximum of five nodes in active/active setups).

- Similar consideration applies for CPU requirements but RAM considerations are a higher priority due to the way the Terraform graph is calculated versus the way the containment operates.

- Note also that in the default configuration, if the instance is processing ten concurrent workspace runs which are classed as non-production by your user base, subsequently submitted workspace runs considered as production workloads by your users will have to wait until concurrency becomes available. This is a key consideration for the Platform Team to manage.

- We recommend liaising with your HashiCorp Solutions Engineer as necessary.
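The arithmetic behind the defaults above can be checked with a short sketch. The function name `run_capacity` and its parameters are hypothetical; the default values (concurrency of ten, 512 MB per worker) are the ones stated in the list, and the sketch assumes every active node uses the same settings.

```python
def run_capacity(concurrency: int = 10, worker_ram_mb: int = 512,
                 active_nodes: int = 1) -> dict:
    """Estimate concurrent runs and worker RAM for a Terraform Enterprise install.

    Assumes every active node uses the same concurrency and RAM settings.
    """
    if concurrency < 1 or worker_ram_mb < 1 or active_nodes < 1:
        raise ValueError("all inputs must be positive")
    return {
        # Active/active multiplies concurrency by the number of nodes.
        "concurrent_runs": concurrency * active_nodes,
        # RAM needed on each node if all of its workers are busy.
        "ram_per_node_mb": concurrency * worker_ram_mb,
    }
```

With the defaults this gives ten concurrent runs and 5120 MB of worker RAM per node; with three active nodes the same settings allow thirty concurrent runs.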

Naming convention

We recommend the following naming convention for organizations:

- <customer-name>-prod-org: This organization serves as the main organization which processes what the Platform Team considers as production workloads.

- <customer-name>-test-org: This organization is dedicated to integration testing and special proofs of concept conducted by the Platform Team.

Access management and permissions

This section extends public documentation on the subject of HCP Terraform RBAC.

The owners team

When an organization is established, an owners team is created automatically. It is a fundamental team, and because of its role cannot be deleted.

As a member of the owners team, you have comprehensive access to all aspects of the organization, including policies, projects, workspaces, VCS providers, teams, API tokens, authentication, SSH keys, and cost estimation. A number of team-related tasks are reserved for the owners team and cannot be delegated:

- Creating and deleting teams

- Managing organization-level permissions granted to teams

- Viewing the full list of teams (including teams marked as secret).

Additionally, you can generate an organization-level API token to manage teams, team membership, and workspaces. It is important to note that this API token does not have permission to perform plans and applies in workspaces. Before assigning a workspace to a team, you can use either a member of the organization owners group or the organization API token to carry out initial setup.

Organization-level permissions

Key organization-level RBAC permissions and their respective purposes are below.

- Manage policies: Allows the Platform Team to create, edit, read, list, and delete the organization's Sentinel policies. This permission also grants you access to read runs on all workspaces for policy enforcement purposes, which is necessary to set enforcement of policy sets.

- Manage run tasks: Enables the Platform Team to create, edit, and delete run tasks within the organization.

- Manage policy overrides: Permits the Platform Team to override soft-mandatory policy checks. This permission also includes access to read runs on all workspaces for policy override purposes.

- Manage VCS settings: Allows the Platform Team to manage the VCS providers and SSH keys available within the organization.

- Manage private registry: Grants the Platform Team the ability to publish and delete providers and/or modules in the organization's private registry. Normally, these permissions are only available to organization owners.

- Manage membership: Enables the Platform Team to invite users to the organization, remove users from the organization, and add or remove users from teams within the organization. This permission allows the Platform Team to view the list of users in the organization and their organization access. However, it does not provide the ability to create teams, modify team settings, or view secret teams.

As you review the permissions above, it becomes clear that many of them are not required for typical users. Therefore, we recommend granting these organization-wide permissions to a select group of your team members, reserving them for the most senior members on your team and restricting access for members of the development team. This approach helps prevent unintended modifications or disruptions to your organization's configuration.

It is crucial to treat the organization token as a secret and limit access to only privileged staff. Additionally, you have the flexibility to create additional teams within your organization and grant them specific organization-level permissions to suit the needs of the team and maintain proper access control.

Setting up organization-level access

Because of the broad elevated privileges that the owners team has, we recommend limiting membership to a very small group of trusted users (i.e. the HCP Terraform Platform Team) and creating additional teams as needed to manage specific areas of the organization. For example, the Security Team may need to manage policies. We generally recommend automating this setup rather than configuring it by hand.

You may need to make adjustments to this model based on the breakdown of roles and responsibilities in your organization. If you do, update this matrix based on your context and make sure you update your HCP Terraform deployment documentation managed by the CCoE.

Projects

HCP Terraform projects are containers for one or more workspaces. You can attach a number of configuration elements at the project level, which are then automatically shared with all workspaces managed by this project:

- Team permissions

- Variable set(s)

- Policy set(s)

Because of the inheritance mechanism, projects are the main organization tool to delegate the configuration and management responsibilities in a multi-tenant setup. The two standard project-level permissions that are used to achieve this delegation are:

- Admin - provides full control over the project (including deleting the project).

- Maintain - provides full control of everything in the project, except the project itself.

Variations on the proposed delegation model are possible by creating your own custom permission sets and assigning those to the appropriate teams in your organization. The benefits of this are:

- Increased agility with workspace organization:

- Related workspaces can be added to projects to simplify and organize a team's workspace view.

- Teams can create and manage infrastructure in their designated project without requesting admin access at the organization level.

- Reduced risk with centralized control:

- Project permissions allow teams to have administrative access to a subset of workspaces.

- This helps application teams safely manage their workspaces without interfering with other teams' infrastructure and allow organization owners to maintain the principle of least privilege.

- Best efficiency with self-service:

- No-code provisioning is integrated with projects, which means teams with project-level admin permissions can provision no-code modules directly into their project without requiring organization-wide workspace management privileges.

Project design

When deploying HCP Terraform at scale, a key consideration is the effective organization of your projects to ensure maximum efficiency. Projects are typically allocated to an application team. Variable sets are either assigned at the project level (for example for cost-codes, owners, and support contacts) and/or at the workspace level depending on requirements.

We recommend automated onboarding. This workflow should make use of either the HCP Terraform and Terraform Enterprise provider or the HCP Terraform API to automate the creation of the application team project and initial workspaces (such as those for development, test and production) and assign the necessary permissions to the teams. This will ensure that project allocation is done in a consistent manner. A landing zone concept can also be applied here.

We recommend assigning separate projects for each application within a business unit. The naming convention for projects is: <business-unit-name>-<application-name>.

Access management and permissions

Teams can be created without requiring any of the aforementioned organization-level permissions. Instead, permissions can be explicitly granted at the project and workspace levels. We recommend setting permissions at the project level as it offers better scalability and allows teams to have the flexibility to create their own workspaces when necessary. By granting permissions at the project level, you can effectively manage access control while providing teams with the necessary privileges for their specific workspaces. Below are the permissions you can grant and their respective functionalities:

- Read: Users with this access level can view any information on the workspace, including state versions, runs, configuration versions, and variables. However, they cannot perform any actions that modify the state of these elements.

- Plan: Users with this access level have all the capabilities of the read access level, and in addition, they can create runs.

- Write: Users with this access level have all the capabilities of the plan access level. They can also execute functions that modify the state of the workspace, approve runs, edit variables on the workspace, and lock/unlock the workspace.

- Admin: Users with this access level have all the capabilities of the write access level. Additionally, they can delete the workspace, add and remove teams from the workspace at any access level, and have read and write access to workspace settings such as VCS configuration.
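Because each access level includes everything granted by the level below it, the four levels can be treated as an ordered scale. The sketch below illustrates that idea only; the `Access` enum, the action names in `REQUIRED`, and the `can` helper are hypothetical and cover just a handful of the actions named in the list above.

```python
from enum import IntEnum


class Access(IntEnum):
    READ = 1
    PLAN = 2
    WRITE = 3
    ADMIN = 4


# Minimum level needed for a few actions named in the list above.
# The action keys are illustrative labels, not HCP Terraform identifiers.
REQUIRED = {
    "view_state": Access.READ,
    "create_run": Access.PLAN,
    "approve_run": Access.WRITE,
    "edit_variables": Access.WRITE,
    "lock_workspace": Access.WRITE,
    "delete_workspace": Access.ADMIN,
}


def can(level: Access, action: str) -> bool:
    """Return True if the access level is high enough for the action."""
    return level >= REQUIRED[action]
```

A team with plan access can create runs but cannot approve them, while a team with write access can do both but still cannot delete the workspace.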

Variations on the standard roles are possible by creating your own custom permission sets and assigning those to the appropriate teams in your project. We recommend using workspace-level permissions to demonstrate segregation of duty for regulatory compliance, such as to limit access to production workspaces to production services teams, and the converse for application development teams.

Workspace

A workspace is the most granular container concept in HCP Terraform. They serve as isolated environments where you can independently apply infrastructure code. Each workspace maintains its own state file, which tracks the resources provisioned within that specific environment.

This separation allows for distinct configurations, variables, and provider settings for each workspace. By organizing your workspaces under their respective projects, you establish a clear and hierarchical relationship among different infrastructure components.

Workspace design

When designing workspaces, it is important to consider the following:

- Workspace isolation: Each workspace should be isolated from other workspaces to prevent unintended changes or disruptions. This isolation ensures that changes made to one workspace do not affect other workspaces.

- Workspace naming: We recommend following a naming convention for workspaces to maintain consistency and clarity. The recommended format, with a maximum of 90 characters, is <business-unit>-<app-name>-<layer>-<env>. By incorporating the business unit, application name, layer, and environment into the workspace name, you can easily identify and associate workspaces with specific components of your infrastructure.

Naming convention

We recommend following a naming convention for workspaces to maintain consistency and clarity. The recommended format is: <business-unit>-<app-name>-<layer>-<env> where:

- <business-unit>: The business unit or team that owns the workspace.

- <app-name>: The name of the application or service that the workspace manages.

- <layer>: The layer of the infrastructure that the workspace manages (e.g., network, compute, data, app).

- <env>: The environment that the workspace manages (e.g., dev, test, prod).

By incorporating the business unit, application name, layer, and environment into the workspace name, you can easily identify and associate workspaces with specific components of your infrastructure. We recommend a maximum of ninety characters but this is not a hard limit.

Many application teams will not need the layer component in the name as the infrastructure they need for their application is relatively simple. To standardize, the string main or app can be used in place of the layer component. This will help to maintain consistency across the organization.
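A small helper can make the convention easy to apply consistently in onboarding automation. The sketch below is one possible approach under stated assumptions: the function name `workspace_name` is hypothetical, each component is assumed to be lowercase alphanumeric (so the hyphen separators stay unambiguous), and the recommended 90-character maximum is enforced as an error even though it is not a hard limit in the product.

```python
import re

MAX_LEN = 90                         # recommended maximum, enforced here by choice
_PART = re.compile(r"^[a-z0-9]+$")   # assumed character set for each component


def workspace_name(business_unit: str, app_name: str, env: str,
                   layer: str = "main") -> str:
    """Build <business-unit>-<app-name>-<layer>-<env>.

    The layer defaults to "main" for teams that do not split by layer.
    """
    parts = [business_unit, app_name, layer, env]
    for part in parts:
        if not _PART.match(part):
            raise ValueError(f"invalid name component: {part!r}")
    name = "-".join(parts)
    if len(name) > MAX_LEN:
        raise ValueError(f"name exceeds {MAX_LEN} characters: {name}")
    return name
```

For instance, `workspace_name("bu1", "payments", "prod")` yields `bu1-payments-main-prod`, and passing `layer="network"` yields `bu1-payments-network-prod`.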

When to divide a workspace

- Performance: Large Terraform graphs which have to process hundreds or thousands of objects for every plan/apply can increase time-to-market for application component changes. On Terraform Enterprise, they can cause out-of-memory errors if the graph grows larger than the RAM allocation from the container daemon, and in any deployment they can lead to network timeouts or API limits being reached. To mitigate these challenges, we recommend these actions in descending order of preference:

- Split the configuration across a number of workspaces which will individually take less time to plan and have a smaller RAM footprint.

- Move the workspace run to an agent pool where a dedicated, bespoke, vertically-scaled specification can be made available to the workload.

- Privileges: Splitting up workspaces allows for access control refinement for different users of your organization, as described in the section on projects above.

Terraform runs begin with a state refresh, so in workspaces containing hundreds or thousands of resources, plan and apply operations take longer. The Terraform CLI's parallelism limit controls how many resources can be checked in parallel during these operations. More information on this can be found in the Terraform documentation.
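To get a feel for how resource count and parallelism interact, the rough sketch below computes a lower bound on the number of sequential batches needed to refresh a workspace. It is a simplification: it ignores dependencies between resources and provider API latency, both of which only make real runs slower. The function name is hypothetical; the default of 10 is the Terraform CLI default for `-parallelism`.

```python
import math

DEFAULT_PARALLELISM = 10  # Terraform CLI default for -parallelism


def min_refresh_batches(resource_count: int,
                        parallelism: int = DEFAULT_PARALLELISM) -> int:
    """Lower bound on sequential batches needed to check every resource."""
    if resource_count < 0 or parallelism < 1:
        raise ValueError("resource_count must be >= 0 and parallelism >= 1")
    return math.ceil(resource_count / parallelism)
```

A workspace with 2,000 resources needs at least 200 batches at the default parallelism, whereas four workspaces of 500 resources each need at least 50 batches apiece and can run concurrently.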

Landing zones

A cloud landing zone is a foundational concept in cloud computing and a common deployment pattern for scaled customers. Defined as a standardized environment deployed in a cloud to facilitate secure, scalable, and efficient cloud operations, it forms a blueprint for setting up workloads in the cloud and serves as a launching pad for broader cloud adoption.

Cloud landing zones address several important areas:

- Networking: Configured with the network resources, such as virtual private clouds, subnets, and connectivity settings needed for operating workloads in the cloud.

- Identity and access management: Helps to enforce IAM policies, RBAC, and other permissions settings.

- Security and compliance: Security configurations such as encryption settings, security group rules, and logging settings.

- Operations: This includes operational tools and services, such as monitoring, logging, and automation tools. These help to manage and optimize cloud resources effectively.

- Cost management: Enables cost monitoring and optimization by facilitating automation, tagging policies, budget alerts, and cost reporting tools.

Landing zones are commonly deployed by HCP Terraform, but the landing zone concept is also applicable to HCP Terraform itself. In this pattern, the Platform Team uses automation to deploy a management workspace during the onboarding of an internal customer. That workspace is then used to deploy the operational environment the customer needs to support their development remit, akin to the equivalent CSP account and configuration needed by the same team. The recommended way to do this is:

- Complete preparative reading: Read about cloud landing zone patterns applicable to the CSPs with whom you partner.

- Define the HCP Terraform landing zone: There are a number of ways to apply the LZ concept, but core requirements include:

- Use of the Terraform Enterprise provider so that there is state representation for the configuration. This applies to both HCP Terraform and Terraform Enterprise.

- Definition of a VCS template which contains boilerplate Terraform code for the LZ and a directory structure designed and managed by the Platform Team.

- Creation of a Terraform module for your private registry - this will be called by the Platform Team's automated onboarding process when an application team wants to onboard. This module should nominally create the following:

- A management workspace for the application team.

- A VCS repository for the application team to store the code which will run in the management workspace. This will use the VCS repository template above.

- Variables/sets as needed for efficient deployment.

- Public and/or private cloud resources for the team to use as necessary.

- Augment your onboarding pipeline to make use of the module. The process should hook into other organizational platforms for credential generation and observability etc.

- Dedicate a HCP Terraform project to house the landing zone management workspaces separately from others the Platform Team own. Use the Terraform Enterprise provider or HCP Terraform API to deploy this. The onboarding process will direct creation of management workspaces into this project.

- Test: Create landing zones using the process.

- Update the module and VCS template: Expect to do this a number of times before you are satisfied that the process scales. This is a key area which must not be underestimated. Depending on the onboarding design, if you onboard ten internal customers using the process, then need to change the process, this will apply from that point onwards for the next set of customers, but depending on the implementation, those already deployed may need to be updated manually such that standardization can be applied across all customers. This is also why it is important to funnel sets of early adopters onto the platform as you develop the solution.

- Ensure smooth onboarding: The process should be as smooth as possible for the application teams. If using ServiceNow as the front door to the onboarding process, the Platform Team should liaise with the ServiceNow team as early as possible as there will be a lead time for remediation.

Landing zone workflow

Via the tasks above (irrespective of onboarding platform technology), the workflow can be summarized as follows:

- Ticket raised: Audit trail created, approval acquired as appropriate.

- Landing zone child module call code added: The top-level workspace and its VCS repository is where the Platform Team collect and manage onboarded teams.

- We recommend automating the addition of this child module call in order to ensure the solution is scalable. We expect your strategic orchestration technology would be used as part of this automation.

- If your business expects to onboard thousands of teams, then planning of the Platform Team landing zone deployment workspace should be handled more carefully to avoid performance issues with the workspace run as discussed at the end of the previous section.

- Run the top-level workspace: This will create the management workspace for the application team using the Terraform landing zone module.

- Application team management workspace created: The template VCS should contain Terraform code to use child modules to create the application team's workspaces. In a franchise model, the application team would have write access to this repository so that they can configure it to deploy all the required workspaces to support their needs. In a service catalog model, the management workspace would then also be run to create the application team's workspaces to hook into subsequent request processes for pre-canned infrastructure requests.
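The step of adding a child module call for each onboarded team lends itself to automation. As a minimal sketch, assuming the landing zone module lives in a private registry and accepts `team_name` and `business_unit` inputs (both input names, the `lz_` label prefix, and the function name are hypothetical), an onboarding pipeline could render the module block like this:

```python
def render_module_call(team: str, business_unit: str,
                       module_source: str, module_version: str) -> str:
    """Render a Terraform module block that onboards one application team.

    The input variable names (team_name, business_unit) are assumptions;
    use whatever inputs your landing zone module actually defines.
    """
    label = "lz_" + team.replace("-", "_")
    lines = [
        f'module "{label}" {{',
        f'  source  = "{module_source}"',
        f'  version = "{module_version}"',
        "",
        f'  team_name     = "{team}"',
        f'  business_unit = "{business_unit}"',
        "}",
    ]
    return "\n".join(lines) + "\n"
```

The pipeline would then commit the rendered block to the VCS repository backing the top-level workspace, so that the next run of that workspace creates the team's management workspace.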

Note

While we have found many of our scaled customers have derived significant success scaling Terraform onboarding through the use of the landing zone concept, each customer implements them differently, and the above is a generalization of the process. We recommend that you engage with a HashiCorp Solution Engineer or Solution Architect to discuss your specific requirements. This topic is discussed in the other HashiCorp Validated Design Operating Guides.

Post-installation tasks

An optional step at the end of automated installation of Terraform Enterprise is the creation of the Initial Admin Creation Token (IACT), but further initialization tasks are expected to complete setup. There are two viable options for post-provision configuration in Terraform Enterprise: API scripts or the Terraform Enterprise provider. It is considered best practice to use the Terraform Enterprise provider in order to derive state for the configuration, but all configuration can be accomplished via the API.

SSO and teams

The setup of SSO for HCP Terraform is not covered in detail in this document, as configurations can vary depending on the SAML 2.0-compliant provider used.

- You can find specific instructions for popular identity providers here.

- For applicable SAML 2.0 documentation, see here.

We recommend automating the creation of teams alongside projects and workspaces using the Terraform Enterprise provider. After creating the initial teams required, automate the addition of users within your strategic IdP platform. By adding a Team Attribute Name (default: MemberOf) attribute within your IdP, you can automatically assign users to the created groups in the SAML configuration. You can find further details here.

Note: User accounts used by pipelines should be flagged in SAML by setting IsServiceAccount to true. See isserviceaccount. Teams must be created in Terraform Enterprise with the exact name of the group in the IdP. Do not create users in Terraform Enterprise manually; these are created automatically after the SAML assertion is processed at login time.
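The exact-name requirement is worth testing before rollout, because a group that differs from its team name by case or spacing will not map. The sketch below models that matching only; it is not how Terraform Enterprise is implemented, the function name is hypothetical, and it assumes that groups without a matching team are simply skipped.

```python
def teams_for_user(member_of: list[str], existing_teams: set[str]) -> list[str]:
    """Return the teams a user would join, given their MemberOf groups.

    Matching is by exact name; groups with no matching team are assumed
    to be skipped rather than created.
    """
    return sorted(group for group in member_of if group in existing_teams)
```

Running this against an export of IdP group names and the team names created by your automation is a quick way to spot mismatches such as `BU1-Payments-Admins` versus `bu1-payments-admins`.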

Connect to a version control system

We recommend connecting HCP Terraform/Terraform Enterprise to your VCS provider in order to enable simple workflows for managing modules in the private registry, managing Sentinel or OPA policy-sets, and connecting VCS-backed workspaces to VCS repositories.

GitHub App

If your organization uses GitHub, the preferred method of VCS connection is a GitHub App, for a number of reasons: a GitHub App is not tied to a specific user, does not require a personal access token, and is safe to set up as a static VCS connection with no need to rotate tokens. A GitHub App connects Terraform to GitHub, but confers no access permissions itself. Instead, access to repositories is governed by the following.

- the GitHub organization(s) in which the GitHub App is installed.

- individual GitHub user permissions (to use the GitHub App, Terraform users must authorize it with their GitHub credentials).

See GitHub's documentation for more detail.

Terraform Enterprise supports a single site-wide GitHub App, which is shared across all Terraform Enterprise organizations. To use it, create and configure it using a site admin account.

Choose the appropriate level of visibility for your GitHub App from the following list.

- If you only have one GitHub organization, configure your GitHub App as private.

- If you have multiple organizations under the same GitHub Enterprise, create the App under your enterprise account (at the time of writing, this feature is in public preview).

- If you need access to repositories across multiple GitHub organizations, configure your GitHub App as public but do not list it on GitHub's marketplace.

HCP Terraform has a preconfigured public GitHub App which you can install in any GitHub organization(s) as required.

OAuth

For providers other than GitHub, VCS connections are authorized using OAuth within each Terraform Enterprise organization. They can be set up either by the manual UI workflow using an ID and secret from the VCS, or automated using the HCP Terraform and Terraform Enterprise provider with an access token from the respective VCS account. As with all static tokens, it is good practice to rotate this periodically.

OAuth authorizes Terraform Enterprise to act as a specific user or account on the VCS. Terraform's access to VCS organizations and repositories is governed by the permissions of that account. For simplicity we recommend setting up a single OAuth connection per VCS provider in each Terraform Enterprise organization. Use a service account with access to all IaC and policy-as-code repositories, to avoid any dependency on individual employee accounts.

If a more granular or least-privilege permissions model is required, you can create multiple OAuth VCS connections, each mapping a subset of VCS repositories to a subset of Terraform projects. In this case it would be a function of the landing-zone module described above to create these OAuth connections and project mappings on demand whenever a new project is onboarded.

Audit

In HCP Terraform only, the Audit Trails API provides access to audit events. Terraform Enterprise customers should forward logs for monitoring and auditing purposes. See the section on observability for more details. Audit, logging and monitoring should be included in the target architecture for the delivery of your project. Do not wait until after go-live to implement observability.

HCP Terraform agents

Pools of HCP Terraform agents allow HCP Terraform and Terraform Enterprise to communicate with isolated APIs in discrete network segments such as those on premise. We recommend defining agent pools and assigning workspaces to them using the Terraform Enterprise provider as appropriate. This improves the availability of your on-premise APIs and allows for configuration of network hardware, internal VCS, CI/CD systems etc. using IaC. This significantly improves the return on investment of HCP Terraform and Terraform Enterprise.

You may choose to run multiple agents within your network, up to the organization's purchased agent limit (which only affects HCP Terraform customers; there is no such limit for Terraform Enterprise customers). Each agent process runs a single Terraform run at a time. Multiple agent processes can be concurrently run on a single instance, license limit permitting.
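Since each agent process handles one run at a time, the number of runs a pool can execute concurrently equals the number of agent processes, capped by any purchased agent limit. The sketch below captures that arithmetic; the function name and parameters are hypothetical, and it assumes the limit counts individual agent processes.

```python
from typing import Optional


def agent_run_capacity(processes_per_host: list[int],
                       agent_limit: Optional[int] = None) -> int:
    """Concurrent runs available from a set of agent hosts.

    Each agent process runs a single Terraform run at a time. Pass
    agent_limit=None for Terraform Enterprise, where no limit applies.
    """
    total = sum(processes_per_host)
    if agent_limit is not None:
        total = min(total, agent_limit)
    return total
```

Three hosts running four agent processes each can serve twelve concurrent runs on Terraform Enterprise, but only ten on an HCP Terraform organization with a purchased limit of ten agents.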

We strongly recommend reading the HCP Terraform agent documentation as part of your implementation planning.

Compatibility

Agents currently only support x86_64 Linux operating systems. Agents support Terraform versions 0.12 and above only. Workspaces configured to use Terraform versions below 0.12 cannot select the agent-based execution mode.

When running Terraform Enterprise, consult the release notes for agent version restrictions for your target Terraform Enterprise version and also the HashiCorp considerations for HCP Terraform agents on Terraform Enterprise.

Operational considerations

The agent runs in the foreground as a long-running process that continuously polls for workloads from HCP Terraform. An agent process may terminate unexpectedly due to a variety of reasons. We strongly recommend pairing the agent with a process supervisor to ensure that it automatically restarts in case of an error. On a Linux host, this can be done using a systemd manifest:

    [Unit]
    Description="HashiCorp HCP Terraform Agent"
    Documentation="https://www.terraform.io/cloud-docs/agents"
    Requires=network-online.target
    After=network-online.target

    [Service]
    Type=simple
    User=tfcagent
    Group=tfcagent
    ExecStart=/usr/local/bin/tfc-agent
    KillMode=process
    KillSignal=SIGINT
    Restart=on-failure
    RestartSec=5
    EnvironmentFile=-/etc/sysconfig/tfc-agent

    [Install]
    WantedBy=multi-user.target

If using HCP Terraform agents in containers, use single-execution mode to ensure your agent only runs a single workload and guarantees a clean working environment for every run.

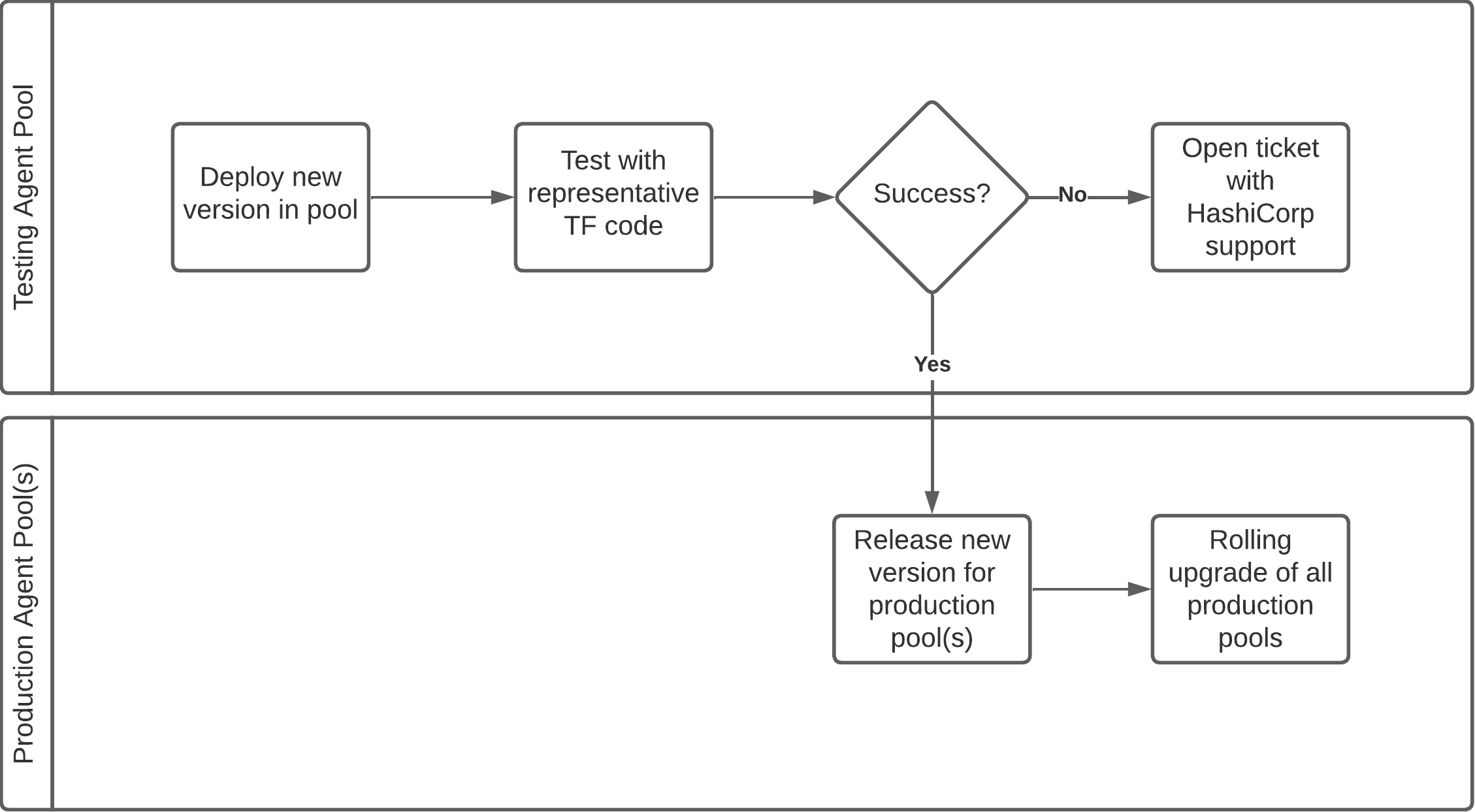

Upgrading HCP Terraform agents

When HashiCorp releases a new version of Terraform Enterprise, we test it against the most current HCP Terraform agent version. Should you need to upgrade your HCP Terraform agent version, we recommend testing the new version in a separate agent pool before rolling it out to your main agent pool(s). Use the Platform Team's dedicated HCP Terraform organization or Terraform Enterprise instance for this.

If you are considering upgrading to an HCP Terraform agent version released after your currently running Terraform Enterprise version, do not skip testing the new agent, and match the extent of the testing effort to the extent of the change. Patches and minor changes require little testing, while major changes require more extensive tests.
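One way to make this guidance actionable in a pipeline is to classify the version change and choose a test plan accordingly. The sketch below assumes agent versions follow a MAJOR.MINOR.PATCH scheme; the function name and the effort labels in `TEST_EFFORT` are hypothetical.

```python
def change_level(current: str, candidate: str) -> str:
    """Classify a version change as "major", "minor", "patch", or "none".

    Assumes both versions are plain MAJOR.MINOR.PATCH strings.
    """
    cur = tuple(int(x) for x in current.split("."))
    new = tuple(int(x) for x in candidate.split("."))
    if len(cur) != 3 or len(new) != 3:
        raise ValueError("expected MAJOR.MINOR.PATCH versions")
    if new[0] != cur[0]:
        return "major"
    if new[1] != cur[1]:
        return "minor"
    if new[2] != cur[2]:
        return "patch"
    return "none"


# Illustrative mapping from change level to testing effort.
TEST_EFFORT = {"major": "extensive", "minor": "light", "patch": "light", "none": "none"}
```

A pipeline could call `TEST_EFFORT[change_level(running, proposed)]` to decide whether a quick smoke test in the separate agent pool is enough or a fuller regression pass is needed.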

The diagram below illustrates our recommended rollout approach for a new HCP Terraform agent version: