Service Discovery

Service discovery is the process of automatically detecting and locating services within your infrastructure. In the context of Nomad Enterprise, it allows applications and services to find and communicate with each other without hardcoding IP addresses and ports.

Service discovery can be thought of as a more advanced form of DNS: it goes beyond translating domain names into IP addresses and provides additional capabilities such as:

- Real-time updates as services come online or go offline

- Health status information about services

- Metadata about services (e.g., version, capabilities)

- Load balancing across multiple instances of a service

- Support for dynamic environments where service locations frequently change

These advanced features make service discovery an essential component in modern distributed systems, enabling flexible and resilient application architectures.

In a shared service environment, where multiple teams deploy various applications and microservices, service discovery becomes crucial for several reasons:

- Services may be scaled up, down, or moved between hosts frequently. Service discovery ensures that clients can always locate the services they need, regardless of changes in the underlying infrastructure.

- Service discovery facilitates load balancing and enhances system resilience by allowing clients to discover all healthy instances of a service and distribute requests among them.

- It eliminates the need for manual reconfiguration or calls to external systems, such as an IPAM, when service locations change.

Note

While Nomad provides basic service discovery capabilities, these are limited to services running within the Nomad cluster itself. Nomad does not offer native support for external service discovery, ingress routing, or a DNS interface. To enable comprehensive service discovery and routing, including for external clients, you need to integrate Nomad with tooling that specializes in service discovery, such as Consul, which provides robust service discovery and a DNS interface and integrates easily with Nomad.
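As a rough sketch of what that integration can look like, the service block below registers a service with Consul instead of Nomad's native provider. It assumes a Consul agent is already reachable from the Nomad clients and that a port labeled "http" is defined in the group's network block; the service name is illustrative.

```hcl
service {
  name     = "web-service" # illustrative service name
  port     = "http"        # assumes a port labeled "http" in the group's network block
  provider = "consul"      # Consul is the default provider; shown explicitly for clarity
}
```

With Consul's default DNS settings, a service registered this way would typically be resolvable as web-service.service.consul.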

Health Checks

Health checking is a crucial component of service discovery and management in Nomad Enterprise. It ensures that only healthy, properly functioning service instances are available for discovery and are used by other applications. Service health checks are automated tests that Nomad performs to determine the status of a service.

Visit the Service discovery on Nomad tutorial for a guide on how to implement it in your job file. The check and service documentation pages provide a detailed reference for the available options.

Nomad supports the following capabilities for native service discovery:

Note

[`provider = "nomad"`](https://developer.hashicorp.com/nomad/docs/job-specification/service#provider) must be specified because the `service` block defaults to Consul.

HTTP health checks

This example configures an HTTP health check for a web application. It sends a GET request to the /healthz endpoint every 10 seconds and times out after 5 seconds if no response is received.

service {
  name     = "web-service"
  port     = "http"
  provider = "nomad"
  check {
    type     = "http"
    path     = "/healthz"
    interval = "10s"
    timeout  = "5s"
    method   = "GET"
  }
}

If your application has multiple HTTP endpoints, you can use multiple HTTP service checks. Additionally, you can add in custom headers. This example shows two services:

- HTTP GET request to the `/healthz` endpoint over port 8080 using an `Authorization` header.

- HTTP GET request to `/v1/db-check` to ensure the API service is able to communicate with the database.

- If both return a `200` status code, the health check passes. If one fails, they both fail.

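# The group name is illustrative; a group block requires a label.
group "api" {
  network {
    port "api" {
      to = 8080
    }
  }
  task "api" {
    #...
    # Checks /healthz on port 8080 and sends an Authorization header.
    service {
      name     = "api-service"
      port     = "api"
      provider = "nomad"
      check {
        type     = "http"
        path     = "/healthz"
        interval = "10s"
        timeout  = "5s"
        method   = "GET"
        header {
          Authorization = ["Basic ZWxhc3RpYzpjaGFuZ2VtZQ=="]
        }
      }
    }
    # Checks that the API can reach the database. It uses the "api" port
    # label, the only port defined in the network block above.
    service {
      name     = "db-ready"
      port     = "api"
      provider = "nomad"
      check {
        type     = "http"
        path     = "/v1/db-check"
        interval = "10s"
        timeout  = "5s"
        method   = "GET"
      }
    }
  }
}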

Use multiple checks to verify different components or functionalities of your service and adjust intervals based on the importance and expected response time of each check.
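As a minimal sketch of that advice, the block below attaches two checks with different intervals to a single service. The check names, paths, and timings are illustrative assumptions, and the port label "api" is assumed to exist in the group's network block.

```hcl
service {
  name     = "api-service"
  port     = "api" # assumes a port labeled "api" in the group's network block
  provider = "nomad"

  # Cheap liveness probe: run it often with a short timeout.
  check {
    name     = "api-alive" # illustrative check name
    type     = "http"
    path     = "/healthz"
    interval = "10s"
    timeout  = "2s"
  }

  # Heavier dependency probe: run it less often and allow more time.
  check {
    name     = "api-db" # illustrative check name
    type     = "http"
    path     = "/v1/db-check"
    interval = "30s"
    timeout  = "5s"
  }
}
```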

Note

Any non-2xx response code is considered a failure. Adjust your application so that its health endpoints respect this.

TCP Health Check

Alternatively, you can use a TCP health check for services where a simple connection test is sufficient. Adjust the interval based on the criticality of the service and the acceptable detection time for failures. This example attempts to establish a TCP connection every 10 seconds to the port labeled "endpoint", which is defined in the group's network block. The check fails if the connection isn't established within 5 seconds.

service {
  name     = "app"
  port     = "endpoint"
  provider = "nomad"
  check {
    type     = "tcp"
    port     = "endpoint"
    interval = "10s"
    timeout  = "5s"
  }
}

If the application uses multiple ports, you can define a service check for each port. Remember, if one check fails, they both fail.

# The group and task names are illustrative; both blocks require a label.
group "app" {
  network {
    port "endpoint1" {
      to = 8080
    }
    port "endpoint2" {
      to = 9000
    }
  }
  task "app" {
    #...
    service {
      name     = "app_endpoint1"
      port     = "endpoint1"
      provider = "nomad"
      check {
        type     = "tcp"
        port     = "endpoint1" # must match a port label defined above
        interval = "10s"
        timeout  = "5s"
      }
    }
    service {
      name     = "app_endpoint2"
      port     = "endpoint2"
      provider = "nomad"
      check {
        type     = "tcp"
        port     = "endpoint2"
        interval = "10s"
        timeout  = "5s"
      }
    }
    #...
  }
}

Upgrades

Health checks can also be used during upgrades to ensure the application is healthy before promoting it. When health_check = "checks" is set within the update block, Nomad uses the tasks' service checks to determine whether an upgraded allocation is healthy. If the health checks fail and auto_revert is set to true, the job rolls back to the last stable version.

# The job name is illustrative; a job block requires a label.
job "web" {
  update {
    health_check = "checks"
    auto_revert  = true
  }
  group "frontend" {
    count = 3
    network {
      port "http" {
        to = 8080
      }
    }
    task "web" {
      service {
        name     = "web-service"
        port     = "http"
        provider = "nomad"
        check {
          type     = "http"
          path     = "/healthz"
          interval = "10s"
          timeout  = "5s"
          method   = "GET"
        }
      }
    }
  }
}
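To illustrate the "healthy before promoting" idea, here is a sketch of an update block that places a single canary and promotes it only after its checks pass. The specific values are illustrative assumptions rather than recommendations; tune them to your application's startup time.

```hcl
update {
  max_parallel     = 1        # update one allocation at a time
  canary           = 1        # start one canary before touching existing allocations
  health_check     = "checks" # use the service checks to judge health
  min_healthy_time = "30s"    # checks must pass for this long (illustrative value)
  healthy_deadline = "5m"     # give up on an allocation after this long (illustrative value)
  auto_promote     = true     # promote automatically once the canary is healthy
  auto_revert      = true     # roll back to the last stable version on failure
}
```

With auto_promote left at its default of false, the deployment would instead wait for an operator to promote the canary manually.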

Leveraging Service Discovery in Tasks

For services to communicate with each other without knowing each other's IP address or DNS hostname, you need to use the template feature. Templates, combined with service discovery, offer a way to dynamically configure tasks based on the current state of your infrastructure. These templates are initially rendered when a task starts and can be set to update either periodically or in response to specific events, ensuring your tasks always have the most up-to-date service information.

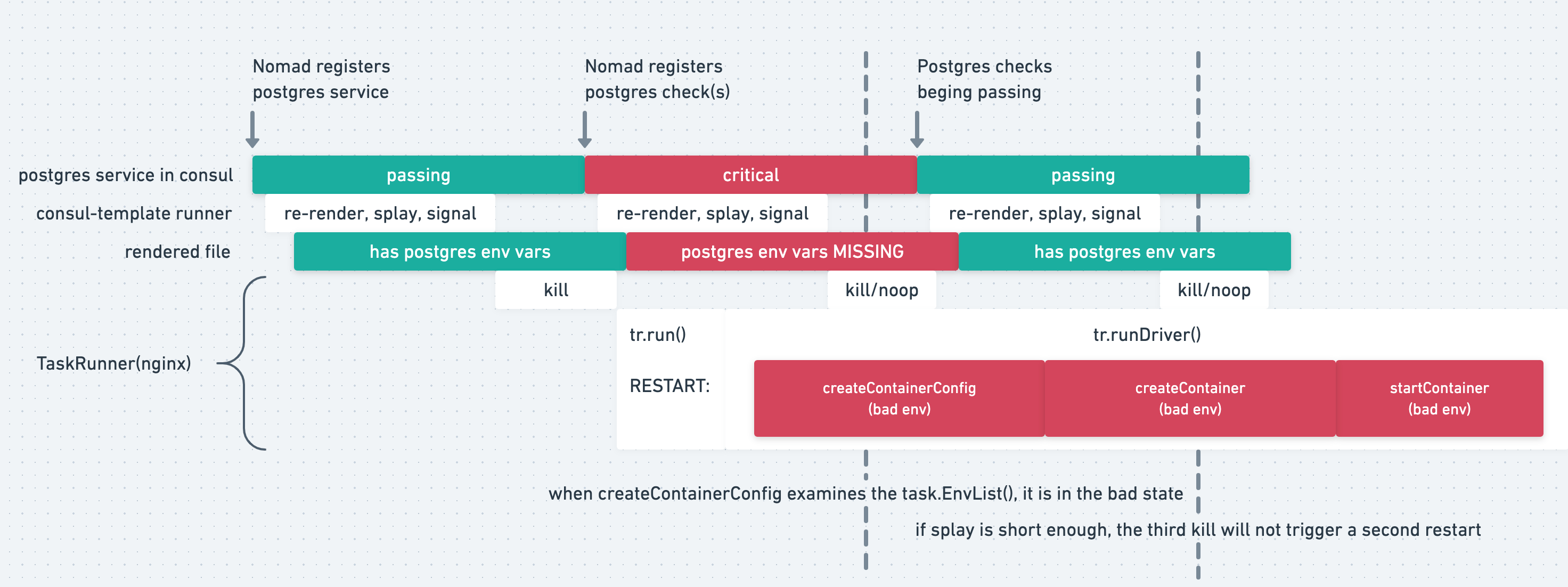

For service discovery, the data that is dynamically retrieved are the IP address and port of another task. Here is an example diagram to visualize the templating flow:

Let's look at two different tasks that need to communicate with each other:

App1 depends on App2 and reaches it through a hardcoded IP address set as an environment variable. This does not scale: it breaks as soon as App2 is scheduled on a different host, and it pins traffic to one instance when there are multiple instances. Dynamic ports make the problem worse, because App2's port can also change whenever it is restarted or rescheduled.

job "app1" {
  group "app1group" {
    network {
      port "app1" {}
    }
    task "server" {
      env {
        APP2_IP = "172.16.30.5"
      }
      service {
        name     = "app1"
        port     = "app1"
        provider = "nomad"
      }
    }
  }
}

job "app2" {
  group "app2group" {
    count = 3
    network {
      port "app2" {}
    }
    task "server" {
      service {
        name     = "app2"
        port     = "app2"
        provider = "nomad"
      }
    }
  }
}

To fix this, we can move the environment variable into a template so that App1 always has the current IP address and port of App2.

job "app1" {
  group "app1group" {
    network {
      port "app1" {}
    }
    task "server" {
      # Render App2's address into an env file and load it into the task.
      template {
        data = <<EOH
{{ range nomadService "app2" }}
APP2_IP = {{ .Address }}:{{ .Port }}
{{ end }}
EOH
        destination = "local/vars.env"
        env         = true
      }
      service {
        name     = "app1"
        port     = "app1"
        provider = "nomad"
      }
    }
  }
}
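When App2 runs several instances, the application may want every address rather than a single one. The sketch below joins all instances into one comma-separated environment variable; the variable name APP2_ADDRS and the destination file name are hypothetical, and it assumes the application knows how to split the list itself.

```hcl
template {
  # APP2_ADDRS is a hypothetical variable name. The "if $i" guard emits a
  # comma before every entry except the first.
  data = <<EOH
APP2_ADDRS={{ range $i, $s := nomadService "app2" }}{{ if $i }},{{ end }}{{ $s.Address }}:{{ $s.Port }}{{ end }}
EOH
  destination = "local/app2.env" # hypothetical file name
  env         = true
}
```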

By default, if the IP address or port of App2 changes, Nomad automatically restarts the App1 task. Set change_mode to control what action Nomad takes when the rendered template changes.
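As a sketch of a non-default change_mode, the template below writes App2's addresses to a file and sends the task a signal instead of restarting it. It assumes the application reloads its configuration on SIGHUP; the destination file name and the one-address-per-line format are illustrative.

```hcl
template {
  # One "address:port" entry per line for each registered app2 instance.
  data = <<EOH
{{ range nomadService "app2" }}
{{ .Address }}:{{ .Port }}
{{ end }}
EOH
  destination   = "local/app2-upstreams.txt" # hypothetical file name
  change_mode   = "signal"
  change_signal = "SIGHUP" # assumes the task reloads its config on SIGHUP
}
```

This approach suits file-based configuration. A running process does not see updated environment variables, so templates that use env = true generally still need a restart to take effect.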

Templating is a powerful tool which allows a lot of creativity and flexibility for your workloads.

Visit the template documentation page for more information.