Terraform: Integration Guide for HCP Packer

| HashiCorp Products | HCP Terraform, Terraform Enterprise, HCP Packer |
|---|---|
| Partner Products | N/A |
| Maturity Model | Standardizing |
| Use Case Coverage | Run Task, HCP Better Together |
| Tags | Run Task, Image Management |
| Guide Type | HVD Integration Guide |
| Publish Date, Version | April 25, 2024, Version 1.0.0 |
| Authors | Randy Keener, Prakash Manglanathan, Mark Lewis |

Introduction

Note

This document refers to HCP Terraform by default. Unless otherwise noted, HCP Terraform (SaaS) and Terraform Enterprise (self-hosted) offer the same functionality for this integration.

This guide aims to provide platform teams with prescriptive guidance on establishing an integration between HCP Packer and HCP Terraform.

Target audience

- Platform Team responsible for HCP Terraform

- Platform Team responsible for golden machine images (Packer Team)

- Infrastructure security engineers

Benefits

- Application Team developers can quickly provision infrastructure from images that conform to organizational image standards.

- The Platform and Security Teams have good visibility into which images are being provisioned. They can manage and communicate image changes when a vulnerability is detected or a base image becomes outdated.

Prerequisites and limitations

Before starting this guide, we recommend reviewing the following:

- Terraform Enterprise Solution Design Guide (for self-hosted customers)

- Terraform Operating Guide - Adopting

- Packer Documentation

- Packer Tutorials

Access requirements

- Admin access to HCP Packer

- Admin access to HCP Terraform

- An API token (see the Create API Token docs)

- Admin access to the VCS repository

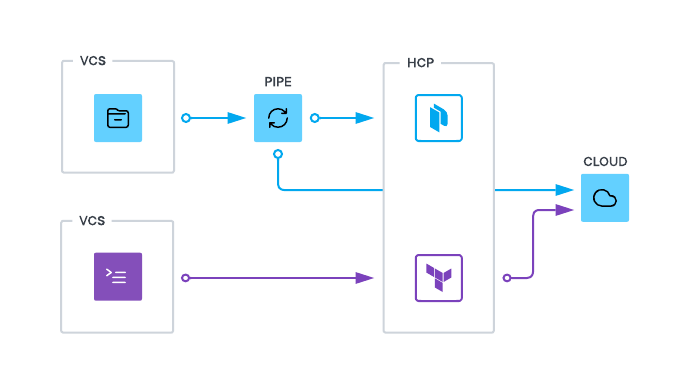

Integration architecture

The main components of this integration are:

- VCS repository: This is where Terraform and Packer code will be stored and collaborated on. The VCS repository should have connectivity to HCP Terraform in addition to the Packer build pipeline (CI/CD).

- CI/CD pipeline: The build pipeline executes Packer builds. It needs access to both HCP Packer and the cloud provider where the images are built and stored.

- HCP Terraform: This is where the Terraform code will be executed. The Terraform code will be used to provision the infrastructure (e.g. VMs) where the images will be deployed. HCP Terraform will need to connect to HCP Packer to get the image metadata. This is in addition to the integrations with VCS and the cloud provider.

- HCP Packer: This is where image metadata is stored. The machine images themselves are not stored in HCP Packer.

Note

If you use Terraform Enterprise and/or HCP Terraform Agents, ensure that your Terraform Enterprise instance, the agents, and the CI/CD workers can reach the HCP Packer API endpoint: https://api.cloud.hashicorp.com.

This guide does not cover components that are not core to this integration, such as SSO, logging, and SCIM tooling.

Prescriptive guidance

Our objective in these HashiCorp Validated Designs is to give you prescriptive, best-practice guidance based on our experience partnering with numerous organizations that have implemented HCP Terraform with HCP Packer.

People and process

The Terraform Operating Guides discuss people and process recommendations for onboarding HCP Terraform or Terraform Enterprise in depth. This section briefly describes the core teams and their responsibilities for managing this integration.

Platform team

In the context of this integration, the Platform Team is responsible for the following:

- Establish the integration between HCP Terraform and HCP Packer.

- Assign the HCP Packer run task to the appropriate Terraform workspaces (all workspaces or a selected subset), and enable drift detection on the relevant workspaces.

- Set up periodic collaboration meetings with the Security Team to confirm that the integration's objectives are being met.

- Enable HCP Terraform notifications on workspaces and ensure that the right channels (email groups, Slack, etc.) receive them.

- Set expectations for the application teams now that the run task is enabled for their Terraform runs:

  - Run tasks involve additional processing, which may slightly increase wait times for application teams.

  - Workspace runs that previously succeeded may be blocked by the run task and must then be remediated.

- Keep documentation about this integration in a centralized location for the application teams.

- Provide enablement and training to the platform team on how to use HCP Packer.

- Update modules and CI/CD pipelines to connect with HCP Packer.

Object planning

HCP Packer has an object hierarchy that requires careful planning and access control. We recommend using a single HCP organization, with the HCP Packer objects in a single project dedicated to platform team use.

HCP Packer registry: This is the top-level object in HCP Packer. We recommend a single consolidated registry per organization. Larger organizations may need multiple registries, but some functionality, such as ancestry tracking, might be lost.

HCP Packer bucket: This is where image metadata is stored. We recommend a separate bucket for each application or team, with a consistent naming convention for bucket names. The Platform Team is responsible for creating the buckets and assigning the appropriate permissions.

We recommend a naming convention such as `<bu>-<appname>-images` for the buckets, where:

- `<bu>` is the business unit.

- `<appname>` is the application name.

HCP Packer channels: Channels are used to manage the lifecycle of images. We recommend at least three channels: development, test, and production. The Platform Team is responsible for creating the channels and should use consistent channel names across all buckets.
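The bucket naming convention and channel layout described above can also be managed as code with the HCP provider. The following is a minimal sketch. It assumes a provider version that includes the `hcp_packer_bucket` and `hcp_packer_channel` resources, and HCP credentials supplied through the `HCP_CLIENT_ID` and `HCP_CLIENT_SECRET` environment variables. The business unit (`retail`) and application name (`web`) are hypothetical.

```hcl
terraform {
  required_providers {
    hcp = {
      source = "hashicorp/hcp"
    }
  }
}

# Credentials are read from the HCP_CLIENT_ID and HCP_CLIENT_SECRET
# environment variables, so no arguments are set here.
provider "hcp" {}

locals {
  # Hypothetical values following the <bu>-<appname>-images convention.
  bu       = "retail"
  appname  = "web"
  channels = ["development", "test", "production"]
}

# One bucket per application, named consistently across the organization.
resource "hcp_packer_bucket" "app" {
  name = "${local.bu}-${local.appname}-images"
}

# The same lifecycle channels are created in every bucket.
resource "hcp_packer_channel" "lifecycle" {
  for_each    = toset(local.channels)
  name        = each.value
  bucket_name = hcp_packer_bucket.app.name
}
```

Reusing one module like this for every application bucket is one way to keep channel names consistent across buckets.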

Workflows

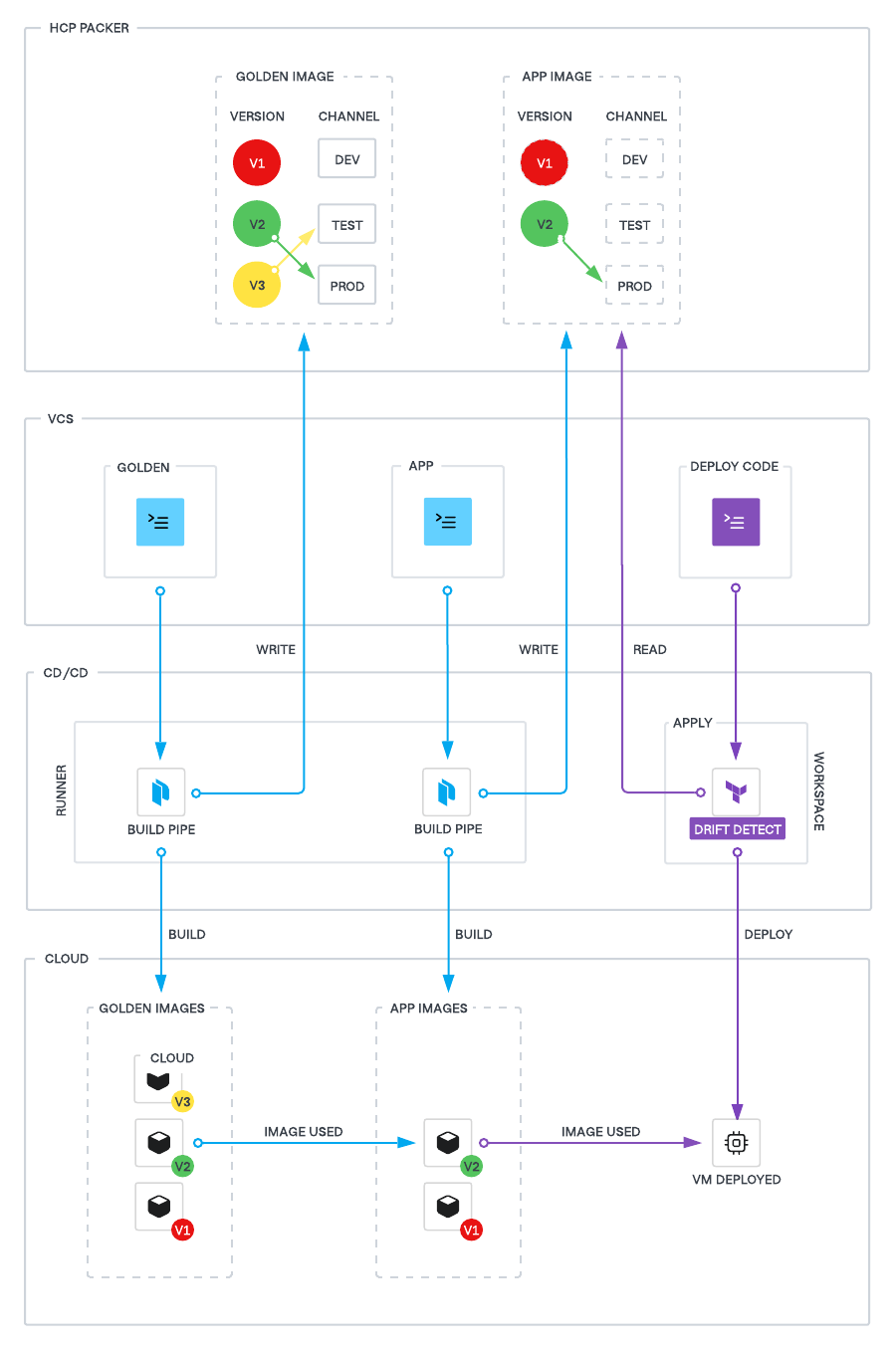

The following diagram illustrates how the various components interact to enable the workflows in the context of this integration.

Workflow 1: Golden image build workflow

The group within the Platform Team responsible for creating the golden images performs this workflow. The output is an organizational golden image, also known as a base image or first layer image.

- Golden image Packer code is stored in the Packer repository (VCS).

- The image code references the HCP Packer registry so that build metadata is stored there. See the HCP Packer documentation for details.

- Any change to the Packer code goes through a PR process in VCS.

- The CI/CD pipeline is triggered on PR merge.

- The CI/CD pipeline executes the Packer build. This builds the image in the cloud provider and stores the metadata in the HCP Packer registry as a new version.

- The new version is assigned to an HCP Packer channel used for testing and validation.

- Generated images are scanned for vulnerabilities and compliance, using your existing image scanning tooling.

- Once testing is complete, the image is promoted to the production channel, and the image itself is stored in the cloud (public or private).
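A golden image template records its builds in HCP Packer through an `hcp_packer_registry` block inside `build`. Below is a minimal sketch for AWS. It assumes the Amazon plugin, a Canonical Ubuntu 22.04 base AMI, `us-east-1`, and a hypothetical bucket named `platform-golden-images`. The CI/CD pipeline would run `packer init .` and `packer build .` with `HCP_CLIENT_ID` and `HCP_CLIENT_SECRET` set, so that each build creates a new version in the bucket.

```hcl
packer {
  required_plugins {
    amazon = {
      source  = "github.com/hashicorp/amazon"
      version = ">= 1.2.8"
    }
  }
}

locals {
  # Compact timestamp so each AMI gets a unique name.
  timestamp = regex_replace(timestamp(), "[- TZ:]", "")
}

source "amazon-ebs" "golden" {
  region        = "us-east-1"
  instance_type = "t3.micro"
  ssh_username  = "ubuntu"
  ami_name      = "golden-base-${local.timestamp}"

  # Start from the most recent Canonical Ubuntu 22.04 AMI.
  source_ami_filter {
    filters = {
      name                = "ubuntu/images/*ubuntu-jammy-22.04-amd64-server-*"
      root-device-type    = "ebs"
      virtualization-type = "hvm"
    }
    owners      = ["099720109477"]
    most_recent = true
  }
}

build {
  # Publishes this build's metadata as a new version in the HCP Packer bucket.
  hcp_packer_registry {
    bucket_name = "platform-golden-images"
    description = "Organizational golden base image"
    bucket_labels = {
      "owner" = "platform-team"
    }
  }

  sources = ["source.amazon-ebs.golden"]

  # Hardening and baseline provisioners would be added here.
}
```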

Workflow 2: Application image build workflow

We recommend that application or infrastructure teams create application-specific machine images, also known as second-layer images or phoenix images. Building a new image for every change, rather than patching running machines, keeps images immutable, which improves security.

- The Platform Team is responsible for creating the repository and pipeline that the application teams will use to build their images even if the process is shifted left.

- The workflow will be triggered whenever the golden image is updated or a new version of the application is available.

- When the pipeline executes, a new image is built from the golden image and the application binary, and a new version is created in the application-specific bucket in the HCP Packer registry.

- The application team will assign the new version to a channel for testing and validation.

- Once the new image is validated, the image is promoted to the production channel.
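For the application image, Packer's `hcp-packer-version` and `hcp-packer-artifact` data sources can resolve the golden image from HCP Packer at build time. This also lets HCP Packer track the parent-child ancestry. The following is a minimal sketch. It assumes a golden image bucket named `platform-golden-images` with a `production` channel, an application bucket named `retail-web-images`, and a provisioning script at `scripts/install-app.sh`. All three names are hypothetical.

```hcl
packer {
  required_plugins {
    amazon = {
      source  = "github.com/hashicorp/amazon"
      version = ">= 1.2.8"
    }
  }
}

locals {
  timestamp = regex_replace(timestamp(), "[- TZ:]", "")
}

# Version of the golden image currently assigned to its production channel.
data "hcp-packer-version" "golden" {
  bucket_name  = "platform-golden-images"
  channel_name = "production"
}

# AMI for that version in the target platform and region.
data "hcp-packer-artifact" "golden" {
  bucket_name         = "platform-golden-images"
  version_fingerprint = data.hcp-packer-version.golden.fingerprint
  platform            = "aws"
  region              = "us-east-1"
}

source "amazon-ebs" "app" {
  region        = "us-east-1"
  instance_type = "t3.micro"
  ssh_username  = "ubuntu"
  ami_name      = "retail-web-${local.timestamp}"
  # Build on top of the golden image resolved through HCP Packer.
  source_ami = data.hcp-packer-artifact.golden.external_identifier
}

build {
  # Records the application image as a new version in its own bucket.
  hcp_packer_registry {
    bucket_name = "retail-web-images"
    description = "Web application image built on the golden image"
  }

  sources = ["source.amazon-ebs.app"]

  # Hypothetical script that installs the application binary.
  provisioner "shell" {
    script = "scripts/install-app.sh"
  }
}
```

The golden image is resolved through the production channel rather than pinned to a specific AMI ID. As a result, rerunning this build after a golden image promotion picks up the new base automatically.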

Workflow 3: VM/Infrastructure workflow

The Application Team uses Terraform to provision infrastructure from the images created in the previous workflows. This is the standard GitOps-based workflow recommended for provisioning any infrastructure with Terraform, and the Terraform Operating Guide describes it in detail.

Workflow 4: Image remediation workflow

This workflow can be triggered by the following scenarios:

- The Security Team has detected a vulnerability in the image.

- The Platform Team wants to update the golden/base image to a new version.

- The Application Team needs to update the application binary or other details in the application image.

When the Security Team detects a vulnerability, remediation proceeds as follows:

- The Security Team notifies the Platform Team of the vulnerability. The Platform Team updates the Packer code with the patch and rebuilds the golden image. The new version is assigned to the test channel. After testing, the production channel is updated and the vulnerable version is revoked.

- This update will trigger downstream image builds and notifications to the relevant teams. The Application Teams will need to perform testing and validation before promoting the image to production.

- Existing versions of application images are deprecated and removed from the production channel.

- HCP Terraform's built-in drift detection identifies any infrastructure using revoked images and notifies the Application Team, who then run a new plan and apply to update the infrastructure.

Please see the Revoke and Restore document for more details.
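The channel update in the remediation steps can also be managed as code. The following is a minimal sketch. It assumes a provider version that includes the `hcp_packer_channel_assignment` resource and the hypothetical `platform-golden-images` bucket. It assigns whichever version currently sits on the `test` channel to the `production` channel. It covers only the channel update, not revocation.

```hcl
terraform {
  required_providers {
    hcp = {
      source = "hashicorp/hcp"
    }
  }
}

# Look up the patched version that passed testing on the test channel.
data "hcp_packer_version" "golden_test" {
  bucket_name  = "platform-golden-images"
  channel_name = "test"
}

# Point the production channel at that same version.
resource "hcp_packer_channel_assignment" "golden_production" {
  bucket_name         = "platform-golden-images"
  channel_name        = "production"
  version_fingerprint = data.hcp_packer_version.golden_test.fingerprint
}
```

If you apply this configuration only after testing signs off, the promotion goes through the same plan and apply review as any other change.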

Note

If you plan to automate building application images from a new golden image and have hundreds of images to build, design the workflow so that application image builds run in batches rather than simultaneously. This avoids a build storm that may saturate the network. Please see the Revoke and Restore docs for more details.

Steps

Step 1: Connect Packer to HCP Packer

Please follow the HCP Packer tutorial for more details on the steps involved.

At the end of the steps you should have the following:

- An HCP Packer registry is created.

- An HCP Packer bucket is created.

- HCP Packer channels are created.

- HCP Packer is integrated with the Packer code, so each build stores its metadata in the HCP Packer registry.

For more details on connecting Packer to HCP Packer, see the HCP Packer documentation. To test this step, run `packer build` and confirm that a new version is created in HCP Packer.

Step 2: Update Terraform code to use HCP Packer images

The HCP provider offers data sources that retrieve image metadata from HCP Packer. Terraform configurations can therefore look up image IDs at plan time instead of hard-coding them.

- hcp_packer_version data source: Retrieves metadata for the version assigned to a specified channel in an HCP Packer bucket.

  - Usage: Returns the version's fingerprint, which can be passed to hcp_packer_artifact so that deployments use a specific image version.

- hcp_packer_artifact data source: Retrieves the metadata and cloud location of an image. It takes a bucket name, platform, and region, together with either a version fingerprint or a channel name.

  - Usage: Returns the image's external identifier (for example, an AMI ID) for use in compute resources, which gives precise control over what is deployed.

More details on the HCP provider and its data sources are available in the HCP provider documentation.

Example code to show how the HCP data sources can be used in Terraform:

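```hcl
# AWS configuration
data "hcp_packer_artifact" "aws_web_server" {
  bucket_name  = "aws-web-server-images"
  channel_name = "production"
  platform     = "aws"
  region       = "us-east-1" # example; must match the region recorded in HCP Packer
}

resource "aws_instance" "web" {
  # external_identifier holds the cloud-side image ID (here, the AMI ID)
  ami           = data.hcp_packer_artifact.aws_web_server.external_identifier
  instance_type = "t3.micro"
  # Additional configuration...
}

# Azure configuration
data "hcp_packer_artifact" "azure_web_server" {
  bucket_name  = "azure-web-server-images"
  channel_name = "production"
  platform     = "azure"
  region       = "eastus" # example; must match the region recorded in HCP Packer
}

resource "azurerm_linux_virtual_machine" "web" {
  name = "web-server"
  # Use the managed image ID recorded in HCP Packer
  source_image_id = data.hcp_packer_artifact.azure_web_server.external_identifier
  # Additional configuration...
}
```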
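The example above reads the artifact directly from the production channel. A workspace may instead need to resolve the version first, for example to expose the fingerprint as an output or to reuse it across several artifact lookups. In that case, the two data sources can be chained. The following is a minimal sketch, assuming the `aws-web-server-images` bucket from the example above and a hypothetical `us-east-1` region.

```hcl
# Resolve the version currently assigned to the production channel.
data "hcp_packer_version" "web" {
  bucket_name  = "aws-web-server-images"
  channel_name = "production"
}

# Fetch the AWS artifact for exactly that version.
data "hcp_packer_artifact" "web" {
  bucket_name         = "aws-web-server-images"
  version_fingerprint = data.hcp_packer_version.web.fingerprint
  platform            = "aws"
  region              = "us-east-1"
}

resource "aws_instance" "web_pinned" {
  ami           = data.hcp_packer_artifact.web.external_identifier
  instance_type = "t3.micro"
}

# Surface the fingerprint so reviewers can see which image version a plan uses.
output "web_image_fingerprint" {
  value = data.hcp_packer_version.web.fingerprint
}
```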

Note

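Ensure that each workspace using these data sources can authenticate to HCP. For example, set an HCP service principal's credentials as the HCP_CLIENT_ID and HCP_CLIENT_SECRET environment variables in a global variable set. The HCP provider uses these credentials to authenticate with the HCP Packer API.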
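One way to provide these credentials to every workspace is a global variable set managed with the `tfe` provider. The following is a minimal sketch. It assumes the organization name and the service principal's client ID and secret are passed in as input variables. The variable set name is hypothetical.

```hcl
terraform {
  required_providers {
    tfe = {
      source = "hashicorp/tfe"
    }
  }
}

variable "organization" {
  type        = string
  description = "HCP Terraform organization name"
}

variable "hcp_client_id" {
  type      = string
  sensitive = true
}

variable "hcp_client_secret" {
  type      = string
  sensitive = true
}

# A global variable set applies to every workspace in the organization.
resource "tfe_variable_set" "hcp_packer" {
  name         = "hcp-packer-credentials"
  description  = "HCP service principal credentials for the HCP provider"
  organization = var.organization
  global       = true
}

# Stored as environment variables so the HCP provider picks them up.
resource "tfe_variable" "hcp_client_id" {
  key             = "HCP_CLIENT_ID"
  value           = var.hcp_client_id
  category        = "env"
  variable_set_id = tfe_variable_set.hcp_packer.id
}

resource "tfe_variable" "hcp_client_secret" {
  key             = "HCP_CLIENT_SECRET"
  value           = var.hcp_client_secret
  category        = "env"
  sensitive       = true
  variable_set_id = tfe_variable_set.hcp_packer.id
}
```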

Step 3: Configure Run Task in HCP Terraform

Please follow the instructions in the tutorial to integrate HCP Packer with HCP Terraform. We recommend enabling this integration globally.

As with any run task, we recommend testing the run task in non-production workspaces before enabling it in production. We further recommend a phased approach: enable the run task globally with advisory enforcement first, then switch to mandatory enforcement.
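If you manage HCP Terraform configuration as code, the trial phase described above can be expressed with the `tfe` provider. The following is a minimal sketch. It assumes you have already obtained the HCP Packer run task URL and HMAC key from the HCP Packer integration settings and pass them in as variables, along with the ID of a hypothetical non-production workspace. Changing `enforcement_level` from `advisory` to `mandatory` later completes the rollout for that workspace.

```hcl
terraform {
  required_providers {
    tfe = {
      source = "hashicorp/tfe"
    }
  }
}

variable "organization" {
  type = string
}

variable "packer_run_task_url" {
  type        = string
  description = "Run task endpoint URL from the HCP Packer integration settings"
}

variable "packer_run_task_hmac_key" {
  type      = string
  sensitive = true
}

variable "nonprod_workspace_id" {
  type        = string
  description = "Non-production workspace used to trial the run task (hypothetical)"
}

# Registers the HCP Packer run task with the organization.
resource "tfe_organization_run_task" "hcp_packer" {
  organization = var.organization
  name         = "HCP-Packer"
  url          = var.packer_run_task_url
  hmac_key     = var.packer_run_task_hmac_key
  enabled      = true
}

# Attaches the run task to one workspace, in advisory mode first.
resource "tfe_workspace_run_task" "hcp_packer_nonprod" {
  workspace_id      = var.nonprod_workspace_id
  task_id           = tfe_organization_run_task.hcp_packer.id
  enforcement_level = "advisory"
}
```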

Step 4: Configure Sentinel

This step is optional but recommended. We recommend using Sentinel to enforce image use policies in addition to the run task integration. Recommended policies include the following:

- A policy that ensures only images from HCP Packer are used in Terraform runs.

- A policy that ensures only images from the production channel are used in Terraform runs. A sample policy is below.

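```sentinel
import "tfconfig/v2" as tfconfig

// Define the required channel for HCP Packer images
required_channel = "production"

// Collect every hcp_packer_artifact data source across all modules
packer_artifacts = filter tfconfig.resources as _, r {
	r.mode is "data" and r.type is "hcp_packer_artifact"
}

// Main rule: each artifact lookup must set channel_name to the required
// channel as a literal value; a missing or referenced value fails the check
main = rule {
	all packer_artifacts as _, r {
		(r.config.channel_name.constant_value else "") is required_channel
	}
}
```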

Note

Sentinel Policy sets allow workspaces to be excluded from the policy. This is useful for workspaces that are used for testing and development.

Without the Sentinel policies described above, non-compliant images can be used in Terraform runs, which can lead to security vulnerabilities and compliance issues. As with any Sentinel policy, we recommend testing the policy with advisory enforcement in non-production workspaces before enabling it in production.
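As a sketch of that rollout with the `tfe` provider, the following registers the policy in advisory mode in a global policy set and excludes one development workspace. It assumes the sample policy above is saved as a hypothetical file named `production-channel.sentinel` next to the configuration. It also assumes your provider version includes the `tfe_workspace_policy_set_exclusion` resource.

```hcl
terraform {
  required_providers {
    tfe = {
      source = "hashicorp/tfe"
    }
  }
}

variable "organization" {
  type = string
}

variable "dev_workspace_id" {
  type        = string
  description = "Workspace to exclude from the policy set (hypothetical)"
}

# Starts in advisory mode; raise to soft-mandatory or hard-mandatory later.
resource "tfe_policy" "production_channel" {
  name         = "require-production-channel"
  organization = var.organization
  kind         = "sentinel"
  policy       = file("${path.module}/production-channel.sentinel")
  enforce_mode = "advisory"
}

# A global policy set applies to every workspace in the organization.
resource "tfe_policy_set" "image_policies" {
  name         = "hcp-packer-image-policies"
  organization = var.organization
  kind         = "sentinel"
  global       = true
  policy_ids   = [tfe_policy.production_channel.id]
}

# Exempts a development workspace from the global policy set.
resource "tfe_workspace_policy_set_exclusion" "dev" {
  policy_set_id = tfe_policy_set.image_policies.id
  workspace_id  = var.dev_workspace_id
}
```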

Step 5: Ensure drift detection and notifications are enabled

Ensure that drift detection is enabled on all workspaces, or at least on the workspaces that provision infrastructure from HCP Packer images. Non-compliance is then detected and reported in HCP Terraform's workspace explorer. We also recommend enabling workspace notifications, so that application teams are alerted when an image is revoked or a new image is available.
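For workspaces managed with the `tfe` provider, drift detection is enabled through health assessments (the `assessments_enabled` argument), and notifications can be scoped to assessment results. The following is a minimal sketch. It assumes a hypothetical workspace name and a Slack incoming webhook URL supplied as a variable.

```hcl
terraform {
  required_providers {
    tfe = {
      source = "hashicorp/tfe"
    }
  }
}

variable "organization" {
  type = string
}

variable "slack_webhook_url" {
  type      = string
  sensitive = true
}

# Health assessments run drift detection on a schedule for this workspace.
resource "tfe_workspace" "web" {
  name                = "retail-web-prod"
  organization        = var.organization
  assessments_enabled = true
}

# Alerts the application team when an assessment detects drift, a failed check, or an error.
resource "tfe_notification_configuration" "web_drift" {
  name             = "web-drift-alerts"
  enabled          = true
  destination_type = "slack"
  url              = var.slack_webhook_url
  triggers         = ["assessment:drifted", "assessment:check_failure", "assessment:failed"]
  workspace_id     = tfe_workspace.web.id
}
```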

Step 6: Test the key workflows

Test the key workflows described in the Workflows section: the golden image build workflow, the application image build workflow, the VM/infrastructure workflow, and the image remediation workflow. Ensure that each works as expected.